Canton 3.3 Release Notes for Splice 0.4.0

by Curtis Hrischuk

May 28, 2025

by Curtis Hrischuk

May 28, 2025

The Canton 3.3 release notes for Splice 0.4.0 are provided below. It highlights the new features in Canton 3.3 that focus on easing application development, testing, upgrading, and supporting the long-term stability of Canton APIs. The high level structure is:

- Impact and Migration Overview

- Daml Language and SDK

- Canton Ledger API

- Canton Network Quick Start

- The Canton Platform

- Participant Query Store

- Other Changes

Introduction

The new release of Canton 3.3 has several goals:

-

To promote application development for Canton Network's Global Synchronizer by providing stable public APIs with minimal future changes.

-

To support Splice features.

-

To make application upgrades easier.

-

To enhance operational capabilities.

-

Additional small improvements.

If you are interested in migrating your Global Synchronizer application to Splice 0.4.0, please review the blog post "Canton / Daml 3.3 Release Notes Preview," which describes this in detail. This blog post expands on that one with coverage of new features that applications can take advantage of or that operators can use in managing their validator.

Although much of the discussion here is about the Canton Network or Splice, it is still relevant to application developers for private synchronizer or multi-synchronizer applications. For example, Canton 2 private synchronizer application providers can begin to explore Canton 3 with this release. Please note that deploying private synchronizer or multi-synchronizer applications to production is recommended for a future Canton release.

Technical Enablement Updates

A new technical documentation site for Daml and Canton 3.x is available. This new site contains our latest technical references, explanations, tutorials, and how-to guides. It also offers improved design, navigation, search, and clearer documentation structure. The new site organizes documentation by Canton Network use case (Overview, Connect, Build, Operate, Subnet) to make relevant information easier to find and consume.

Our Technical Solution Architect Certification course, released earlier this year, provides in-depth coverage of best practice architectural considerations when developing Daml applications for Canton, equipping users with the knowledge needed to design scalable, secure, and high-performance solutions. Sign up for a free account on our LMS platform to get started.

Explore the new docs and consider enrolling to deepen your expertise with Daml and Canton.

Impact and Migration Overview

There are two types of application updates discussed in this blog post. The first are updates needed to run on a network that has migrated to Splice 0.4.0. The second set is for deprecated features that are backwards compatible in Splice 0.4.0, but the backwards compatibility will be removed in Splice 0.5.0.

For reference, the sections with update or migration information for application, development process, or operations are listed below:

- Smart Contract Upgrade (SCU)

- Standard library changes

- Daml compiler changes

- Use Canton's Error Description instead of Daml Exceptions

- JSON API v2

- Identifier Addressing by-package-name

- Application_id and domain are Renamed

- offset is now an Integer

- event_id is changed to offset and node_id

- Interactive Submission

- Universal Event Streams

- Ledger API Interface Query Upgrading

- Automatic Node Initialization and Configuration

- Deduplication Offset

- Topology-Aware Package Selection

- Renamed Canton console commands

- Signing Key Usage

- Removed identifier delegation topology request and `IdentityDelegation` key usage

- Topology Management Minor Breaking Changes

- DAR and Package Services

- Refactored synchronizer connectivity service

- Participant Query Store

- Other Changes

Daml Language and SDK

Smart Contract Upgrade (SCU)

Smart Contract Upgrade (SCU) allows Daml models (packages in DAR files) to be updated transparently. This makes it possible to fix application bugs or extend Daml models without downtime or breaking Daml clients. This feature also eliminates the need to hardcode package IDs, which increases developer efficiency. For example, you can fix a Daml model's bug by uploading the DAR that has the fix in a new version of the package. SCU was introduced in Canton v2.9.1 and is now also available in Canton 3.3.

This feature is well-suited for developing and rolling out incremental template updates. There are guidelines to ensure upgrade compatibility between DAR files. The compatibility is checked at compile time, DAR upload time, and runtime. This is to ensure data backwards compatibility and forward compatibility (subject to the guidelines being followed) so that DARs can be safely upgraded to new versions. It also prevents unexpected data loss if a runtime downgrade occurs (e.g., a ledger client is using template version 1.0.0 while the participant node has the newer version 1.1.0).

You may need to adjust your development practice to ensure package versions follow a semantic versioning approach. To prevent unexpected behavior and ensure compatibility, this feature enforces unique package names and versions for each DAR being uploaded to a participant node, and that packages with the same name are upgrade-compatible. It is no longer possible to upload multiple DARs with the same package name and version. Please ensure you are setting the package version in the daml.yaml files and increasing the version number as new versions are developed.

The 3.x documentation for SCU is in preparation but not yet available. However, the documentation from 2.x is largely applicable and available here with the reference documentation available here; please ignore the protocol version and the Daml-LF version details.

Daml Script

Daml Script provides a way of testing Daml code during development. The following changes have been made to Daml Script:

- The daml-script library in Canton 3.3 is Daml 3’s name for daml-script-lts in Canton 2.10 (renamed from daml3-script in 3.2). The old daml-script library of Canton 3.2 no longer exists.

- allocatePartyWithHint has been deprecated and replaced with allocatePartyByHint. Note that parties can no longer have a display name that differs from the PartyHint. This mirrors the Ledger API updates.

- daml-script is now a utility package: the datatypes it defines cannot be used as template or choice parameters, and it is transparent to upgrades. It is strongly discouraged to upload daml-script to a production ledger but this change ensures that it is harmless.

- daml-script exposes a new prefetchKey option for submissions.

Daml Standard Library

The Daml standard library is a set of Daml functions, classes and more that make developing with Daml easier. The following updates have been made to it:

- New Bounded instances for Numeric and Time.

- TextMap is available.

- Added Map.singleton and TextMap.singleton functions.

- Added List.mapWithIndex.

- Added Functor.<$$>.

- Added Text.isNotEmpty.

Daml Language Syntax Change

There is a new alternative syntax for implementing interfaces: one can now write implements I where ... instead of interface instance I for T where …

Daml Assistant

The Daml Assistant is a command-line tool that does a lot of useful things to aid development. It has the following updates:

- Fixed an issue with daml build –all failing to find multi-package.yaml when in a directory below a daml.yaml

- The daml package and daml merge-dars commands were removed since they were deprecated previously.

- The daml start command no longer supports hot reload as this feature is incompatible with Smart Contract Upgrades.

- Added a new multi-package project template called multi-package-example to the daml assistant.

Daml Compiler

The Daml compiler has the following updates:

Some diagnostics during compilation can be upgraded to errors, downgraded to warnings, or ignored entirely. The --warn flag can be used to trigger this behaviour for different classes of diagnostics. The syntax is as follows:- Disable all diagnostics in diagnostic-class with --warn=no-<diagnostic-class>

- Turn all diagnostics in diagnostic-class into warnings with --warn=<diagnostic-class>

- Turn all diagnostics in diagnostic-class into errors with --warn=error=<diagnostic-class>

- Run daml build --help to list all available diagnostic classes, under the documentation for the -W flag.

- This release has the following diagnostic classes:

- deprecated-exceptions

- This class applies to diagnostics about user-defined exceptions being deprecated.

- crypto-text-is-alpha

- This class applies to diagnostics about using primitives from DA’s Crypto.Text modules, which are still alpha and subject to updates.

- ledger-time-is-alpha

- This class applies to diagnostics about using new ledger time assertions such as assertWithinDeadline and assertDeadlineExceeded, which are still alpha and subject to updates.

- upgrade-interfaces

- This class applies to diagnostics about defining interfaces in separate packages from their implementations and from templates. This is discouraged since exceptions are not upgradable, and thus will make the templates not upgradable.

- upgrade-exceptions

- This class applies to diagnostics about defining exceptions and templates in separate packages.

- upgrade-dependency-metadata

- This class applies to diagnostics about dependencies with erroneous metadata.

- upgraded-template-expression-changed

- This class applies to diagnostics about expressions in templates that should remain unchanged between upgrades, such as signatories and keys.

- upgraded-choice-expression-changed

- This class applies to diagnostics about expressions in choices that should remain unchanged between upgrades.

- deprecated-exceptions

- This release has the following diagnostic classes:

-

-

- could-not-extract-upgraded-expression

- This class applies to diagnostics about expressions in templates and choices that could not be found to validate against changes.

- unused-dependency

- This class applies to diagnostics about dependencies that are listed as dependencies but are unused in the package. These should be removed from the daml.yaml, and will not be included in the DAR.

- upgrades-own-dependency

- This class applies to diagnostics about a package that depends on a different, upgradeable version of itself.

- template-interface-depends-on-daml-script

- This class applies to diagnostics about a package which depends on daml-script, which is not recommended for upgradeable packages.

- template-has-new-interface-instance

- This class applies to diagnostics about adding a new interface instance to an existing template.

- could-not-extract-upgraded-expression

- The following three flags have been replaced by the new --warn flag:

- The --warn-bad-exceptions flag has been superseded by --warn=upgrade-exceptions

- The --warn-bad-interface-instances flag has been superseded by --warn=upgrade-interfaces

-

Code Generation

Code generation provides a representation of Daml templates and data types in either Java or TypeScript. The code generation has the following updates:

- In typescript-generated code, undefined is now accepted by functions expecting an optional value and is understood to mean None.

- Typescript code generation no longer emits code for utility packages: these packages don’t expose any serializable type and thus will never be interacted with through the ledger API.

- Java codegen now exposes additional fields in the class representing an interface. Next to TEMPLATE_ID there is INTERFACE_ID and next to TEMPLATE_ID_WITH_PACKAGE_ID there is INTERFACE_ID_WITH_PACKAGE_ID. Both field pairs contain the same value.

Use Canton's Error Description instead of Daml Exceptions

Canton has a standard model for describing errors which is based on the standard gRPC description. The use of standardized LAPI error responses "enable API clients to construct centralized common error handling logic. This common logic simplifies API client applications and eliminates the need for cumbersome custom error handling code."

In Canton 3.2 there are two error handling systems:

- The Daml level: Daml exceptions within the Daml code: user defined exception types are thrown (via the the throw keyword), and caught using try/catch.

- The Ledger API (LAPI) client level: Uses Canton's standard model mentioned above.

Daml exceptions are deprecated in Canton 3.3; please consider migrating away from them.

Canton 3.3 introduces the failWithStatus Daml method so user defined Daml errors can be directly created and passed to the ledger client. The Ledger API client then can inspect and handle errors raised by Daml code and Canton in the same fashion. This approach has several benefits for applications:

- It makes it easy to raise the same Daml error from different implementations of the same interface (e.g. the CN token standard APIs).

- It integrates cleanly into the LAPI client since Canton's error-ids are generated within the Daml code and can extend a subset of these ids.

- Canton will also map all internal exceptions into a FailureStatus error message so that the ledger client can handle them uniformly..

- Avoids the parsing needed to convert Daml User Exceptions into an informative client-side error response. This is simpler and more straightforward.

- Provides support for passing error specific metadata in a unified way back to clients.

An example will help to make this concrete. Consider the following Daml exception:

exception InsufficientFunds with

required : Int

provided : Int

where

message “Insufficient funds! Needed “ + show required + “ but only got “ + show provided

This would have been received by a ledger client as:

Status(

code = FAILED_PRECONDITION, // The Grpc Status code

message = "UNHANDLED_EXCEPTION(9,...): Interpretation error: Error: Unhandled Daml exception: App.Exceptions:InsufficientFunds@...{ required = 10000, provided = 7000}",

details = List(

ErrorInfoDetail(

// The canton error ID

errorCodeId = "UNHANDLED_EXCEPTION",

metadata = Map(

participant -> "...",

// The canton error category

category -> InvalidGivenCurrentSystemStateOther,

tid = "...",

definite_answer = false,

commands = ...

)

),

RequestInfo(

correlationId = "..."

),

ResourceInfo(

typ = "EXCEPTION_TYPE",

// The daml exception type name

name = "<pkgId>:App.Exceptions:InsufficientFunds"

),

ResourceInfo(

typ = "EXCEPTION_VALUE",

// The InsufficientFunds record, with “required” and “provided” fields

name = "<LF-Value>"

),

)

)

Now it will be received by the client as:

Status(

code = 9, // FAILED_PRECONDITION

message = "DAML_FAILURE(9, ...): UNHANDLED_EXCEPTION/App.Exceptions:InsufficientFunds: Insufficient funds! Needed 10000 but only got 7000",

details = List(

ErrorInfoDetail(

errorCodeId = "DAML_FAILURE",

metadata = Map(

"error_id" -> "UNHANDLED_EXCEPTION/App.Exceptions:InsufficientFunds"

"category" -> "InvalidGivenCurrentSystemStateOther",

... other canton error metadata ...

)),

...

)

)

It is also possible to raise errors directly by calling failWithStatus method from Daml code. The error details are encoded as the ErrorInfoDetail metadata. It includes an error_id of the form UNHANDLED_EXCEPTION/Module.Name:ExceptionName for legacy exceptions, and is fully user defined for errors raised from failWithStatus.

Ledger API now gives the DAML_FAILURE error instead of the UNHANDLED_EXCEPTION error when exceptions are thrown and not caught in the Daml code.

For these reasons, Daml Exceptions are deprecated in this release and the failWithStatus Daml method and FailureStatus error message are recommended going forward. Please migrate your code to using failWithStatus and away from the Daml exceptions before 3.4.

Time Boundary Functions

External signing introduces the need to be able to monitor time bounds (often to implement expiry) or measure durations (often between when a contract was created and an expiration time). These durations can be quite long, perhaps a day. This is accommodated with the introduction of time boundary functions in Daml.

This is used by the Canton Network Token Standard. Many of the choices in the Canton Network Token Standard are dependent on ledger time. Expressing these constraints using getTime limits the maximal delay between preparing a transaction and submitting it for execution to one minute. Canton 3.3 introduces new primitives for asserting constraints on ledger time, which remove that artificially tight one minute bound, and instead capture the actual dependency of the submission time and ledger time. These new primitives are used in the Amulet implementation.

The new primitives are:

- Ledger time bound checking predicates: isLedgerTimeLT, isLedgerTimeLE, isLedgerTimeGT and isLedgerTimeGE

- Ledger time deadline assertions: assertWithinDeadline and assertDeadlineExceeded

Cryptographic Primitives

The Canton 3.3 release also introduces Daml support for verifying cryptographic signatures. Thereby making it easier to build Daml workflows that bridge between Canton Network and other chains. These cryptographic primitives are useful for building bridges or wrapping tokens. This feature is in Alpha status so they may be updated based on user feedback.

- BytesHex support (for base 16 encoded byte strings): isHex, byteCount, packHexBytes and sliceHexBytes

- Bytes32Hex support: isBytes32Hex, minBytes32Hex and maxBytes32Hex

- UInt32Hex support: isUInt32Hex, minUInt32Hex and maxUInt32Hex

- UInt64Hex support: isUInt64Hex, minUInt64Hex and maxUInt64Hex

- UInt256Hex support: isUInt256Hex, minUInt256Hex and maxUInt256Hex

- Daml data type BytesHex support via type classes HasToHex and HasFromHex

- Cryptography primitives: keccak256 and secp256k1.

Canton Ledger API

This section provides additional context to supplement the Migration guide from version 3.2 to version 3.3.

JSON API v2

For clarity,we need to distinguish between two JSON API versions:

- JSON Ledger API v1: is in both Canton 3.2 and Canton 3.3. The v1 version is deprecated in this release and will be removed in Canton 3.4.

- JSON Ledger API v2: is introduced in this release and will be the supported JSON Ledger API version going forward.

This section is about JSON Ledger API v2.

Application developers need stable, easily accessible APIs to build on. Canton 3.3 takes a major step forward in stabilizing the developer-facing APIs of the Canton Participant Node by making all calls that are available in the gRPC Ledger API accessible via HTTP JSON v2 as well. It follows industry standards such as AsyncAPI (websocket) and OpenAPI (synchronous, request-response), which enables the use of the associated tooling (e.g., generate language bindings at the discretion of the developer). This allows developers to freely pick between gRPC or HTTP JSON, depending on what suits them best.

Please note that JSON API v2 does not support query-by-attribute capabilities currently offered by JSON API v1. These queries have proven problematic and are no longer supported. The LAPI pointwise query APIs can be used. For more general querying capabilities, werecommend using the Participant Query Store (PQS).

Some further details about JSON API v2 are:

- Http response status codes are based on the corresponding gRPC errors where applicable.

- /v2/users and /v2/parties now support paging.

- openapi.yaml has been updated to correctly represent Timestamps as strings in the JSON API schema.

- Fields that are mapped to Option, Seq or Map in gRPC are no longer required and can be omitted in the request. Their value defaults to an empty container.

- _recordId fields have been removed from Daml records in JSON API.

- default-close-delay has been removed from ws-config (websocket config) in http-service configuration. Close delay is no longer necessary.

- Following types are now encoded as strings:

- HashingSchemeVersion

- PackageStatus

- ParticipantPermission

- SigningAlgorithmSpec

- SignatureFormat

- TransactionShape

As mentioned, JSON Ledger API v1 is deprecated in this release and will be removed in Splice 0.5.0/Canton 3.4. So, applications need to migrate to JSON Ledger API v2 which is available in Splice 0.4.0/Canton 3.3. The migration details from Canton 3.2 to Canton 3.3 are available in the "HTTP JSON API Migration to V2 guide". Please note that including @daml/ledger will not work for V2 because it is for Canton JSON API V1.

Identifier Addressing by-package-name

In Canton 3.3, Smart Contract Upgrade supported two formats for specifying interface and template identifiers to the Ledger API. They are:

- package-name reference format which uses the package name as the root identifier, such as #<package-name>:<module>:<entity>. This was introduced with SCU.

- package-id reference format which uses the package-id that has the format <package-id>:<module>:<entity>.

The package-id reference format will not be supported in Splice 0.5.0. Applications must switch to using the package-name reference format for all requests submitted to the Ledger API (commands and queries).

Application_id and domain are Renamed

There are some cosmetic API changes that were delayed from the jump from Canton 2 to Canton 3. These naming changes are aggregated into this release.

The first is renaming the application_id in the Ledger API to user_id. The migration changes are described in Application ID to User ID rename. Any JSON API v1 calls will also have to make this change.

The second is in anticipation of multi-synchronizer applications where the term domain has changed to synchronizer. This occurs in several places: console commands, gRPC messages, error codes, etc. The migration changes are described in Domain to Synchronizer rename. Any JSON API v1 calls will also have to make this change.

offset is now an Integer

In Canton 3.2, the ledger offset is a string value that is usually converted to a numeric value. In Canton 3.3, the offset is now an int64 which allows trivial and direct comparisons. Negative values are considered to be invalid. A zero value denotes the participant’s begin offset and the first valid offset is 1. Logged offset values will not be in the current hexadecimal format but instead be a decimal Any LAPI or JSON API v1 calls will have to make this change. The String representation is replaced by Long for the Java bindings.

event_id is changed to offset and node_id

The events that are published by the Participant Node's ledger API have changed. In Canton 3.2, event IDs are strings constructed through concatenation of a transaction ID and node ID and they look something like:

#122051327f59fd759c0b16a07f4cd7146960fb7ada6bfcd56e3144f30a503f5e0010:0

The node-ids are participant node specific and are not interchangeable.

In Canton 3.3, the event_id is replaced with a pair (offset, node_id) of integers for all events, recording the origin and position of the events respectively. The current event-id is replaced with the node-id for event-bearing messages such as CreatedEvent, ArchivedEvent, ExercisedEvent. This approach reduces internal and client storage use without any loss in functionality. The lookups by event ID need to be replaced by lookups by offset. The semantics are that the transaction tree structure is recoverable from the node-ids as node-ids within a transaction carry the same information as old event-ids (discussed in Universal Event Streams below). This is accomplished by:

- Replacing event-id in all event-related proto messages with node-id.

- Renaming the ledger api ByEventId queries to ByOffset.

- The GetTransactionByEventId and GetTransactionTreeByEventId queries are removed and replaced by GetUpdateById. The GetTransactionByOffset and GetTransactionTreeByOffset queries are replaced by GetUpdateByOffset.

- child_event_ids fields have been removed from the ExercisedEvent.

- last_descendant_node_id field has been added in the ExercisedEvent. This field specifies the upper boundary for the node ids of the events that are consequences of this exercised event.

- The root_event_ids have been replaced with root_node_ids in the TransactionTree.

- The GetTransactionByEventIdRequest has been replaced by the GetTransactionByOffsetRequest message.

- The GetTransactionByOffsetRequest contains the offset of the transaction or the transaction tree to be fetched and the requesting parties.

The migration changes are described in Event ID to offset and node_id. Any JSON API v1 calls will also have to make this change.

Interactive Submission

The interactive submission service and external signing authorization logic are now always enabled. The following configuration fields must be removed from the Canton Participant Node's configuration:

- ledger-api.interactive-submission-service.enabled

- parameters.enable-external-authorization

The requirement for external signing to pass in all referenced contracts as explicitly disclosed contracts has been removed.

The hashing algorithm for external signing has been updated from V1 to V2. Canton 3.3 will only support hashing algorithm V2, which is not backwards compatible with V1 for several reasons:

- There is a new interfaceId field in the Fetch node of the transaction that is now part of the hash.

- The hashing scheme version (now being V2) is now part of the hash. The hash provided as part of the PrepareSubmissionResponse is updated to the new algorithm as well.

Support for V1 has been dropped and will not be supported in Canton 3.3 onward. Refer to the hashing algorithm documentation for the updated version.

This is important for applications that re-compute the V1 hash client-side. Such applications must update their implementation to V2 in order to use the interactive submission service on Canton 3.3.

Also, the following renamings have happened to better represent their contents:

- The ProcessedDisclosedContract message in the Metadata message of the interactive_submission_service.proto file has been renamed to InputContract.

- The field disclosed_events in the same Metadata message has been renamed to input_contracts.

- The field submission_time in the InputContract message has been renamed to preparation_time.

Universal Event Streams

Currently, a Ledger API (LAPI) client can subscribe to ledger events and receive either a flat transaction stream or a transaction tree stream where neither provides a complete view. Subscribing to topology events is not available either. Universal Event Streams is a new feature that overcomes these challenges while providing additional filtering and formatting capabilities.

The Universal Event Streams feature has transaction filters and streams with the following capabilities:

- Combining of flat and tree transaction capabilities.

- Explicit list of event types to be included in the stream.

- Extending the list of event properties that can be turned on and off.

- The same formatting specification is used in the point-wise queries (GetUpdateByOffset, GetUpdateById) and the SubmitAndWaitForTransaction requests.

It combines the topology and package information into a single continuous stream of updates ordered by their offsets. Future event types will be added in a backwards compatible manner.

The structural representation of Daml transaction trees no longer exposes the root event IDs and the children for exercised events. It now exposes the last descendant node ID for each node. This new representation changes transaction trees to allow:

- Partial reconstruction of filtered transaction trees when some intermediate nodes are missing. The is-descendant relationship between the nodes is preserved.

- More compact representation

- Guarantees a bounded storage even for a very large number of child nodes.

The representation can be considered a variant of the DFUDS (Depth-First Unary Degree Sequence) or a Nested Set model representation.

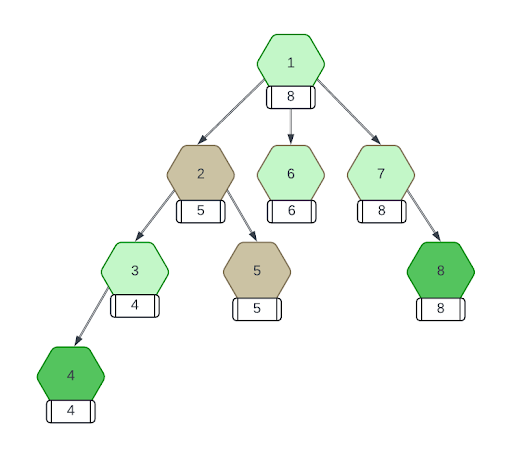

Furthermore, the event nodes are guaranteed to be output in execution order to simplify processing them in that order. If you do need to traverse the actual tree, encoding that traversal as a recursive function with an additional lastDescendandNodeId argument for when to stop the traversal of the current node will work well. The figures below illustrate the difference.

Canton 3.2: store all children of a node and root nodes like this:

Canton 3.3: store the highest node id of node’s descendants:

The Java bindings have an example helper class that can be leveraged to reconstruct the transaction tree. There is also a helper function getRootNodeIds(). The node IDs or root nodes (i.e. the nodes that do not have any ancestors) are important for this computation. A node is a root node if it has no ancestors. There is no guarantee that the root node was also a root in the original transaction (i.e. before filtering out events from the original transaction). In the case that the transaction is returned in AcsDelta shape all the events returned will trivially be root nodes.

For those changes that are required for Splice 0.4.0 see the heading “Required Changes in 3.3” in the section Universal Event Streams to be able to recover the original behavior. Following is a summary of the changes that were made:

- The GetActiveContractsRequest message was extended with the event_format field of EventFormat type. The event_format should not be set simultaneously with the filter or verbose field.

- The GetUpdatesRequest message was extended with the update_format field of UpdateFormat type.

- For the GetUpdateTrees method it must be unset.

- For the GetUpdates method the update_format should not be set simultaneously with the filter or verbose field.

- The GetTransactionByOffsetRequest and the GetTransactionByIdRequest were extended with the transaction_format field of the TransactionFormat type.

- For the GetTransactionTreeByOffset or the GetTransactionTreeById method it must be unset.

- For the GetTransactionByOffset or the GetTransactionById method it should not be set simultaneously with the requesting_parties field.

- The GetEventsByContractIdRequest was extended with the event_format field of the EventFormat type. It should not be set simultaneously with the requesting_parties field.

- The UpdateFormat message was added. It specifies which updates to include in the stream and how to render them. All of its fields are optional and define how transactions, reassignments, and topology events will be formatted. If a field is not set the respective updates will not be transmitted.

- The TransactionFormat message was added. It specifies which events to include in the transactions and what data to compute and include for them.

- The TransactionShape enum defines the event shape for Transactions and can have two different flavors AcsDelta and LedgerEffects.

- AcsDelta: The transaction shape that is sufficient to maintain an accurate ACS view. This translates to create and archive events. The field witness_parties in events are populated as stakeholders, transaction filters will apply accordingly.

- LedgerEffects: The transaction shape that allows maintaining an ACS and also conveys detailed information about all exercises. This translates to create, consuming exercise and non-consuming exercise. The field witness_parties in events are populated as cumulative informees, transaction filters will apply accordingly.

- The EventFormat message was added. It defines both which events should be included and what data should be computed and included for them.

- The filters_by_party field define the filters for specific parties on the participant. Each key must be a valid PartyIdString. The interpretation of the filter depends on the transaction shape being filtered:

- For ledger-effects create and exercise events are returned, for which the witnesses include at least one of the listed parties and match the per-party filter.

- For transaction and active-contract-set streams create and archive events are returned for all contracts whose stakeholders include at least one of the listed parties and match the per-party filter.

- The filters_for_any_party define the filters that apply to all the parties existing on the participant.

- The verbose flag triggers the ledger to include labels for record fields.

- The filters_by_party field define the filters for specific parties on the participant. Each key must be a valid PartyIdString. The interpretation of the filter depends on the transaction shape being filtered:

- The TopologyFormat message was added. It specifies which topology transactions to include in the output and how to render them. It currently contains only the ParticipantAuthorizationTopologyFormat field. If it is unset no topology events will be emitted in the output stream.

- The added ParticipantAuthorizationTopologyFormat message specifies which participant authorization topology transactions to include and how to render them. In particular, it contains the list of parties for which the topology transactions should be transmitted. If the list is empty then the topology transactions for all the parties will be streamed.

- The ArchivedEvent and the ExercisedEvent messages were extended with the implemented_interfaces field. It holds the interfaces implemented by the target template that have been matched from the interface filter query. They are populated only in case interface filters with include_interface_view are set and the event is consumed for exercised events.

- The Event message was extended to include the ExercisedEvent that can also be present in the TreeEvent. When the transaction shape requested is AcsDelta then only CreatedEvents and ArchivedEvents are returned, while when the LedgerEffects shape is requested only CreatedEvents and ExercisedEvents are returned.

The Java bindings and the JSON API data structures have changed accordingly to include the changes described above.

For the detailed way on how to migrate to the new Ledger API please see Ledger API migration guide

To work in Splice 0.5.0, further application updates are needed because the deprecated APIs will be removed. The heading “Changes Required before the Next Major Release” in the section Universal Event Streams has the migration details.

Ledger API Interface Query Upgrading

Streaming and point-wise queries support for smart contract upgrading:

- Dynamic upgrading of interface filters: on a query for interface iface, the Ledger API will deliver events for all templates that can be upgraded to a template version that implements iface. The interface filter resolution is dynamic throughout a stream's lifetime: it is re-evaluated on each DAR upload.

Note: There is no redaction of history: a DAR upload during an ongoing stream does not affect the already scanned ledger for the respective stream. If clients are interested in re-reading the history in light of the upgrades introduced by a DAR upload, the relevant portion of the ACS view of the client should be rebuilt by re-subscribing to the ACS stream and continuing from there with an update subscription for the interesting interface filter. - Dynamic upgrading of interface views: rendering of interface view values is done using the latest (highest semver) package version of the template implementing an interface instance. Packages vetted with validUntil are excluded from the selection.

Note: The selected version to be rendered does not change once stream is started, even if the vetting state evolves.

Canton Network Quick Start

The Quick Start is designed to help teams become familiar with Canton Network Global Synchronizer (CN GS) application development by providing scaffolding to kickstart development. It accelerates application development by:

- Providing an environment with all the common tooling and instructions to develop a Global Synchronizer application.

- Hosting a LocalNet that runs on a developers laptop to speed up the develop, test, and debug cycle. Then easily switch to DevNet to test on a Global Synchronizer.

- Demonstrating a full stack, canonical Global Synchronizer application that can be experimented with.

The intent is that you clone the repository and incrementally update the application to match your business operations.

To run the Quick Start you need some binaries from Artifactory. Request Artifactory access by clicking here and we will get right back to you.

The terms and conditions for the binaries can be found here. The is licensed under the BSD Zero Clause License.

The Canton Platform

Automatic Node Initialization and Configuration

A node can be initialized with an external, pre-generated root namespace key while all other keys are automatically created.

If you have been using manual identity initialization of a node, i.e., using auto-init = false, you will be impacted by the following change in automatic node initialization.

The node initialization has been modified to better support root namespace keys and using static identities for our documentation. Mainly, while before, we had the init.auto-init flag, we now support a bit more versatile configurations.

The config structure looks like this now:

canton.participants.participant.init = {

identity = {

type = auto

identifier = {

type = config // random // explicit(name)

}

}

generate-intermediate-key = false

generate-topology-transactions-and-keys = true

}

A manual identity can be specified via the gRPC API if the configuration is set to manual.

identity = {

type = manual

}

Alternatively, the identity can be defined in the configuration file, which is equivalent to an API based initialization using the external config:

identity = {

type = external

identifier = name

namespace = "optional namespace"

delegations = ["namespace delegation files"]

}

The old behaviour of auto-init = false (or init.identity = null) can be recovered using

canton.participants.participant1.init = {

generate-topology-transactions-and-keys = false

identity.type = manual

}

This means that auto-init is now split into two parts: generating the identity and generating the subsequent topology transactions.

The console command node.topology.init_id has been changed slightly too: It now supports additional parameters delegations and delegationFiles. These can be used to specify the delegations that are necessary to control the identity of the node, which means that the init_id call combined with identity.type = manual is equivalent to the identity.type = external in the config, except that one is declarative via the config, the other is interactive via the console. In addition, on the API level, the InitId request now expects the unique_identifier as its components, identifier and namespace.

The init_id repair macro has been renamed to init_id_from_uid. init_id still exists but takes the identifier as a string and namespace optionally instead.

Extensions to the Topology Transaction Support

Topology transaction messages had the following changes::

- The ParticipantAuthorizationAdded message was added to distinguish the first time the participant acquires permission to a party from the change of that permission (represented by ParticipantAuthorizationChanged).

- The TopologyEvent message was extended to include the ParticipantAuthorizationAdded.

The JSON API and Java bindings have changed accordingly.

Deduplication Offset

In Canton 3.2, only absolute offsets were allowed to define the deduplication periods by offset. Now, participant-begin offsets are also supported for defining deduplication periods. The participant-begin deduplication period (defined as zero value in API) can only be used if the participant was not pruned yet. Otherwise, as in the other cases where the deduplication offset is earlier than the last pruned offset, an error informing you that the deduplication period starts too early will be returned.

ACS Export/Import

Canton 3.3 introduces a new approach to export and import of ACS snapshots, which is an improvement for any future Synchronizer Upgrades with Downtime procedure. It also prepares Canton for online protocol upgrades.

The ACS export and import now use an ACS snapshot containing Ledger API active contracts, as opposed to the Canton internal active contracts. Further, the ACS export now requires a ledger offset for taking the ACS snapshot, instead of an optional timestamp. The new ACS export does not feature an offboarding flag anymore; offboarding is not ready for production use and will be addressed in a future release.

For party replication, we want to take (export) the ACS snapshot at the ledger offset when the topology transaction results in a (to be replicated) party being added (onboarded) on a participant. The new command find_party_max_activation_offset allows you to find such an offset. Analogously, the new find_party_max_deactivation_offset command allows you to find the ledger offset when a party is removed (offboarded) from a participant.

The Canton 3.3 release contains both variants: export_acs_old/import_acs_old and export_acs/import_acs. A subsequent release is only going to contain the Ledger API active contract export_acs/import_acs commands (and their protobuf implementation).

KMS Drivers

KMS drivers are now supported in the Canton community edition to allow custom integrations.

Topology-Aware Package Selection

Composing Canton Network applications is best done via interfaces to minimize the required coordination for rolling out smart contract upgrades of dependencies. In Canton 3.2, this did not work well, as the version of Daml packages was chosen using purely local information about the submitting validator node instead of considering the packages vetted by the counter-participants involved in the transaction. Canton 3.3 introduces support for vetting-state-aware package selection to lower the required coordination for rolling out smart contract upgrades (in many cases to zero). This new feature is called Topology-Aware Package Selection. It uses the topology state of connected synchronizers to optimally select packages for transactions, ensuring they pass vetting checks on counter-participants.

Topology-aware package selection in command submission is enabled by default. To disable, toggle participant.ledger-api.topology-aware-package-selection.enabled = false. A new query endpoint for supporting topology-aware package selection in command submission construction is added to the Ledger API:

- gRPC: com.daml.ledger.api.v2.interactive.InteractiveSubmissionService.GetPreferredPackageVersion

- JSON: /v2/interactive-submission/preferred-package-version

Renamed Canton Console Commands

Canton console commands updates reflect the prior mentioned changes, such as:

- Renaming of the current {export|import}_acs to the {export|import}_acs_old console commands.

- Changed protobuf service and message definitions. Renaming of the {Export|Import}Acs rpc together with their {Export|Import}Acs{Request|Response} messages to the {Export|Import}AcsOld rpc together with their {Export|Import}AcsOld{Request|Response} messages in the participant_repair_service.proto

- Deprecation of {export|import}_acs_old console commands, its implementation and protobuf representation.

- New endpoint location for the new export_acs. The new export_acs and its protobuf implementation are no longer part of the participant repair administration; but now are located in the participant parties' administration: party_management_service.proto. Consequently, the export_acs endpoint is accessible without requiring a set repair flag.

- Same endpoint location for the new import_acs. import_acs and its protobuf implementation are still part of the participant repair administration. Thus, using it still requires a set repair flag.

- No backwards compatibility for ACS snapshots. An ACS snapshot that has been exported with 3.2 needs to be imported with import_acs_old.

- Renamed the current ActiveContact to ActiveContactOld. And deprecation of ActiveContactOld, and in particular its method to ActiveContactOld#fromFile

- Renamed the current import_acs_from_file repair macro to import_acs_old_from_file. And deprecation of import_acs_old_from_file.

Signing Key Usage

The console commands to generate (generate_signing_key) and register signing keys (register_kms_signing_key) now require a signing key usage parameter usage: SigningKeyUsage to specify the intended context in which a signing key is used. This parameter is enforced and ensures that signing keys are only employed for their designated purposes. The supported values are:

- Namespace: Used for the root and intermediate namespace keys that can sign topology transactions for that namespace.

- SequencerAuthentication: Used for signing keys that authenticate network members towards the sequencer.

- Protocol: Used for all signing operations that occur as part of the core protocol. This includes, for example, signing view trees, messages, or data involved in protocol execution.

Removed identifier delegation topology request and `IdentityDelegation` key usage

The IdentifierDelegation topology request type and its associated signing key usage, IdentityDelegation, have been removed because it is no longer used. This usage was previously reserved for delegating identity-related capabilities but is no longer supported. Any existing keys using the IdentityDelegation usage will have it ignored during deserialization.

All console commands and data types on the admin API related to identifier delegations have been removed.

NamespaceDelegation can be restricted to a specific set of topology mappings

It is now possible to have multiple intermediate namespace keys with each one restricted to only authorize a specific set of topology transactions.

NamespaceDelegation.is_root_delegation is deprecated and replaced with the oneof NamespaceDelegation.restriction. See the protobuf documentation for more details. Existing NamespaceDelegation protobuf values can still be read and the hash of existing topology transactions is also preserved. New NamespaceDelegations will only make use of the restriction oneof. transaction is also preserved.

-

- The equivalent of is_root_delegation=true is restriction=CanSignAllMappings.

- The equivalent of is_root_delegation=false is restriction=CanSignAllButNamespaceDelegations

Generalized InvalidGivenCurrentSystemStateSeekAfterEnd

The existing error category InvalidGivenCurrentSystemStateSeekAfterEnd has been generalized. This error category now describes a failure due to requesting a resource using a parameter value that falls beyond the current upper bound (or end) defined by the system's state, for example, a request that asks for data at a ledger offset which is past the current ledger's end.

With this change, the error category InvalidGivenCurrentSystemStateSeekAfterEnd has also been marked as retryable. It makes sense to retry a failed request assuming the system has progressed in the meantime, and thus a previously requested ledger offset has become valid.

Topology Management Breaking Changes

To avoid confusion some error codes and commands have been renamed or removed:

- Topology related error codes have been renamed to contain the prefix TOPOLOGY_, for example: SECRET_KEY_NOT_IN_STORE has become TOPOLOGY_SECRET_KEY_NOT_IN_STORE.

- Renamed the filter_store parameter in TopologyManagerReadService to store because it doesn't act anymore as a string filter like filter_party.

- Console commands changed the parameter filterStore: String to store: TopologyStoreId. Additionally, there are implicit conversions in ConsoleEnvironment to convert SynchronizerId to TopologyStoreId and variants thereof (Option, Set, ...). With these implicit conversions, whenever a TopologyStoreId is expected, users can pass just the synchronizer ID and it will be automatically converted into the correct TopologyStoreId.Synchronizer.

- TopologyManagerReadService.ExportTopologySnapshot and TopologyManagerWriteService.ImportTopologySnapshot are now streaming services for exporting and importing a topology snapshot respectively.

- Changed the signedBy parameter of the console command topology.party_to_participant_mapping.propose from Optional to Seq.

DAR and Package Services

DarService and Package service on the admin-api have been cleaned up:

- Before, a DAR was referred through a hash over the zip file. Now, the DAR ID is the main package ID.

- Renamed all hash arguments to darId.

- Added name and version of DAR and package entries to the admin API commands.

- Renamed the field source description to description and stored it with the DAR, not the packages.

- Renamed the command list_contents to get_content to disambiguate with list (both for packages and DARs).

- Added a new command packages.list_references to support listing which DARs are referencing a particular package.

- Addressing a DAR on the admin api is simplified: Instead of the DAR ID concept, we directly use the main package-id, which is synonymous.

- Renamed all darId arguments to mainPackageId

Faster Recovery by Failing Fast

Being able to fail fast can lead to faster recovery. Following this principle, a new storage parameter is introduced: storage.parameters.failed-to-fatal-delay. This parameter, which defaults to 5 minutes, defines the delay after which a database storage that is continuously in a Failed state escalates to Fatal. The sequencer liveness health is now changed to use its storage as a fatal dependency, which means that if the storage transitions to Fatal, the sequencer liveness health transitions irrevocably to NOT_SERVING. This allows a monitoring system to detect the situation and restart the node. NOTE Currently, this parameter is only used by the DbStorageSingle component, which is only used by the sequencer.

Session Signing Keys

Session signing keys for protocol message signing and verification were added. These are software-based, temporary keys authorized by a long-term key via an additional signature and are valid for a short period. Session keys are designed to be used with a KMS/HSM-based provider to reduce the number of signing operations and, consequently, lower the latency and cost associated with external key management services.

Session signing keys can be enabled and their validity period configured through the Canton configuration using <node>.parameters.session_signing_keys. By default they are currently disabled.

Memory Check during Node Startup

A memory check has been introduced when starting the node. This check compares the memory allocated to the container with the -Xmx JVM option. The goal is to ensure that the container has sufficient memory to run the application. To configure the memory check behavior, add one of the following to your configuration:

canton.parameters.startup-memory-check-config.reporting-level = warn // Default behavior: Logs a warning.

canton.parameters.startup-memory-check-config.reporting-level = crash // Terminates the node if the check fails.

canton.parameters.startup-memory-check-config.reporting-level = ignore // Skips the memory check entirely.

Traffic Fees and Rate Limiting

Previously not all messages being sequenced had a traffic cost associated which could lead to a denial of service attack using "free" traffic on a synchronizer. Furthermore sequencer acknowledgements do not lead to traffic cost and are also rate limited to avoid a denial of service.

A base event cost can now be added to every sequenced submission. The amount is controlled by a new optional field in the TrafficControlParameters called base_event_cost. If not set, the base event cost is 0.

Sequencer acknowledgements do not incur a traffic fee, in order to rate limit acknowledgements the sequencers will now conflate acknowledgements coming from a participant within a time window. This means that if 2 or more acknowledgements from a given member get submitted during the window, only the first will be sequenced and the others will be discarded until the window has elapsed. The conflate time window can be configured with the key acknowledgements-conflate-window in the sequencer configuration, which defaults to 45 seconds.

Example: sequencers.sequencer1.acknowledgements-conflate-window = "1 minute"

Refactored Synchronizer Connectivity Service

The synchronizer connectivity service was refactored to have endpoints with limited responsibilities:

- Added ReconnectSynchronizer to be able to reconnect to a registered synchronizer

- Added DisconnectAllSynchronizers to disconnect from all connected synchronizers

- Changed RegisterSynchronizer does not allow to fully connect to a synchronizer anymore (only registration and potentially handshake): if you want to connect to a synchronizer, use the other endpoint

- Changed ConnectSynchronizer takes a synchronizer config so that it can be used to connect to a synchronizer for the first time

- Renamed ListConfiguredDomains to ListRegisteredSynchronizers for consistency (and in general: configure(d) -> register(ed))

Offline Root Namespace Initialization Scripts

An improvement to the offline root namespace key procedure by using scripts to initialize a participant node's identity using an offline root namespace key. They were added to the release artifact under scripts/offline-root-key. An example usage with locally generated keys is available at examples/10-offline-root-namespace-init.

Participant Query Store

The Participant Query Store (PQS) is compatible with both Canton 2 and Canton 3. The only changes needed are those due to type changes in the Ledger API.

In Canton 3, the Ledger API user can be configured for Universal Reader access (on the participant - via authorizations) so a PQS request for * Parties will include all parties on that participant. This will simplify access for new Parties as they emerge. This can be useful when a PQS belongs to the Participant's organization and is intended to have full (read) access to all party's located there. Configuration argument: `--pipeline-filter-parties=*`

The detailed LAPI changes are as follows:

- Users of the following columns from the SQL functions in general (creates, archives, exercises) must use the new tuple type (offset::bigint, node_id::int):

- event_id, create_event_id, archive_event_id, exercise_event_id

- These columns interpret the offset as an integer:

- offset, created_at_offset, archived_at_offset, exercised_at_offset

- offset is an integer in these functions:

- validate_offset_exists, validate_pruning_offset, validate_reset_offset, reset_to_offset, prune_to_offset, summary_active, creates (optional parameters), archives (optional parameters), exercises (optional parameters)

- PQS needs to be instructed to use the "Canton3.3" Ledger API using the command line:

- Container: $ docker run -it --workdir /daml3.3 digitalasset-docker.jfrog.io/participant-query-store:x.x.x --version

- JAR: use the corresponding .jar daml3.3/scribe.jar

- Users making use of event redaction feature must use the use the new event_id type (also returned by the Read API) comprising of a tuple of integers (offset, node_id): e.g. SELECT redact_exercise((100, 1), '<redaction_id>');

We recommend displaying the event identifying information as <offset>:<node_id>.

This simple Java client is an example of the usage.

Other Changes

- The argument to SubmitAndWaitForTransaction call has been changed from SubmitAndWaitRequest to SubmitAndWaitForTransactionRequest. Apart from the Commands, this message contains the TransactionFormat that defines the attributes of the transaction to be returned. For more details please see the migration guide.

- Ledger API now gives the DAML_FAILURE error instead of the UNHANDLED_EXCEPTION error when exceptions are thrown and not caught in the Daml code.

- The ACS export now requires a ledger offset for taking the ACS snapshot, instead of an optional timestamp. In requesting an ACS, the offset needs to be provided. It should be obtained through a preceding call to getLedgerEnd. The new ACS export does not feature an offboarding flag anymore; offboarding is not ready for production use and will be addressed in a future release.

- Ledger metering has been removed. This involved:

- deleting MeteringReportService in the Ledger API

- deleting /v2/metering endpoint in the JSON API

- deleting the console ledger_api.metering.get_report command

- The package vetting ledger-effective-time boundaries changed to validFrom being inclusive and validUntil being exclusive whereas previously validFrom was exclusive and validUntil was inclusive.

- Changed the endpoint PackageService.UploadDar to accept a list of dars that can be uploaded and vetted together. The same change is also represented in the ParticipantAdminCommands.Package.UploadDar.

- Renamed configuration parameter session-key-cache-config to session-encryption-key-cache.

- sequencer_authentication_service returns gRPC errors instead of a dedicated failure message with status OK.

- display_name is no longer a part of Party data, so it is removed from party allocation and update requests in the Ledger API and Daml script

- PartyNameManagement service was removed from the Admin API

- Renamed request/response protobuf messages of the inspection, pruning, resource management services from Endpoint.Request to EndpointRequest and respectively for the response types.

- The Daml Compiler flags bad-{exceptions,interface-instances} and warn-large-tuples no longer exist

- Typescript code generation no longer emits code for utility packages.

- Added SequencerConnectionAdministration to remote mediator instances, accessible e.g. via mymediator.sequencer_connection.get

- Changed the console User.isActive to isDeactivated to align with the Ledger API

- Added metric daml.mediator.approved-requests.total to count the number of approved confirmation requests

- Removed parameters sequencer.writer.event-write-batch-max-duration and sequencer.writer.payload-write-batch-max-duration as these are not used anymore.

- Remote console sequencer connection config canton.remote-sequencers.<sequencer>.public-api now uses the same TLS option for custom trust store as admin-api and ledger-api sections:

- new: tls.trust-collection-file = <existing-file> instead of undocumented old: custom-trust-certificates.pem-file

- new: tls.enabled = true to use system's default trust store for all APIs

- Authorization service configuration of the Ledger API and admin api is validated. No two services can define the same target scope or audience.

- Changes to defaults in ResourceLimits. The fields max_inflight_validation_requests and max_submission_rate are now declared as optional uint32, which also means that absent values are not encoded anymore as negative values, but as absent values. Negative values will result in a parsing error and a rejected request.

- Moved the canton.monitoring.log-query-cost option to canton.monitoring.logging.query-cost

- New sequencer connection validation mode SEQUENCER_CONNECTION_VALIDATON_THRESHOLD_ACTIVE behaves like SEQUENCER_CONNECTION_VALIDATON_ACTIVE except that it fails when the threshold of sequencers is not reached. In Canton 3.2, SEQUENCER_CONNECTION_VALIDATON_THRESHOLD_ACTIVE was called STRICT_ACTIVE.

- Fixed slow sequencer snapshot query on the aggregate submission tables in the case when sequencer onboarding state is requested much later and there's more data accumulated in the table.