This blog post previews the upcoming Splice 0.4.0 release notes so that you can plan any application changes and have time to raise any questions.

Introduction

Application Changes Needed for Splice 0.4.0

Updates Needed before Splice 0.5.0

Introduction

This blog post previews the upcoming Splice 0.4.0 release notes. It highlights the new features in Canton/Daml 3.3 that ease application development, testing, and upgrading, and that support the long-term stability of the Canton APIs. Because these features may affect Canton Network applications and their backends, we are sharing this preview ahead of the release so that you can plan any application changes and have time to raise any questions. Application migration details are provided for guidance. The complete Daml 3.3 release notes will be published on this blog later.

This post discusses two types of application updates. The first set is required to run on a network that has migrated to Splice 0.4.0. The second set concerns deprecated features that remain backwards compatible in Splice 0.4.0 but whose backwards compatibility will be removed in Splice 0.5.0.

PLEASE NOTE: Applications that use the Validator APIs and Scan APIs are not affected (see validator node network diagram). Applications that integrate directly with the Canton participant node and its Ledger API should review this preview to understand any changes they may need to make.

Application Changes Needed for Splice 0.4.0

Canton Ledger API

Most of the required application updates relate to Canton's Ledger API. This section provides additional context to supplement the Migration guide from version 3.2 to version 3.3.

For clarity, this document distinguishes between two JSON API versions:

- JSON Ledger API v1: available in both Daml 3.2 and Daml 3.3. It is deprecated in this release and will be removed in Daml 3.4.

- JSON Ledger API v2: introduced in this release; it will be the supported JSON Ledger API version going forward.

More details are below in the section Canton JSON Ledger API v1 is Deprecated by Canton JSON API v2.

offset is now an Integer

In Daml 3.2, the ledger offset is a string value that is usually converted to a numeric value. In Daml 3.3, the offset is an int64, which allows trivial and direct comparisons. Negative values are invalid. A value of zero denotes the participant's begin offset, and the first valid offset is 1. Logged offset values are no longer in the current hexadecimal format but are decimal instead. All Ledger API (LAPI) and JSON API v1 calls must make this change. In the Java bindings, the String representation is replaced by Long.
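Because offsets are now plain integers, client code can compare and validate them directly. The sketch below is a minimal illustration, assuming offsets are handled as Java `long` values as in the updated bindings; the `LedgerOffsets` class and its methods are hypothetical helpers, not part of the bindings.

```java
/**
 * Hypothetical helpers for Daml 3.3 ledger offsets, which are int64 values (a Java long).
 * Not part of the Java bindings; shown only to illustrate the new semantics.
 */
final class LedgerOffsets {
    /** Offset 0 denotes the participant's begin offset. */
    static final long PARTICIPANT_BEGIN = 0L;

    /** The first valid offset. */
    static final long FIRST_VALID = 1L;

    private LedgerOffsets() {}

    /** True for offset 0, the participant's begin offset. */
    static boolean isParticipantBegin(long offset) {
        return offset == PARTICIPANT_BEGIN;
    }

    /** Negative offsets are invalid and 0 is the begin marker, so valid offsets start at 1. */
    static boolean isValidOffset(long offset) {
        return offset >= FIRST_VALID;
    }

    /** Direct numeric comparison; no string parsing is needed anymore. */
    static boolean isAfter(long candidate, long reference) {
        requireNonNegative(candidate);
        requireNonNegative(reference);
        return candidate > reference;
    }

    /** Offsets are logged as decimal numbers rather than hexadecimal strings. */
    static String toLogString(long offset) {
        requireNonNegative(offset);
        return Long.toString(offset);
    }

    private static void requireNonNegative(long offset) {
        if (offset < 0) {
            throw new IllegalArgumentException("negative ledger offset is invalid: " + offset);
        }
    }
}
```

For example, a client resuming a stream can use `isAfter` to skip updates it has already processed, instead of parsing offset strings.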

event_id is changed to offset and node_id

The events that are published by the participant node's ledger API have changed. In Daml 3.2, event IDs are strings constructed through concatenation of a transaction ID and node ID and they look something like:

#122051327f59fd759c0b16a07f4cd7146960fb7ada6bfcd56e3144f30a503f5e0010:0

The node-ids are participant node specific and are not interchangeable.

In Daml 3.3, the event_id of every event is replaced by a pair of integers (offset, node_id), which record the origin and the position of the event respectively. For event-bearing messages such as CreatedEvent, ArchivedEvent, and ExercisedEvent, the event-id field is replaced with the node-id. This approach reduces internal and client storage use without any loss of functionality. Lookups by event ID must be replaced by lookups by offset. The transaction tree structure remains recoverable from the node-ids, because node-ids within a transaction carry the same information as the old event-ids (discussed in Universal Event Streams).

This is accomplished by:

- Replacing event-id in all event-related proto messages with node-id.

- Renaming the ledger API ByEventId queries to ByOffset.

The GetTransactionByEventId and GetTransactionTreeByEventId queries are removed and replaced by GetUpdateById. The GetTransactionByOffset and GetTransactionTreeByOffset queries are replaced by GetUpdateByOffset.

The migration changes are described in Event ID to offset and node_id. Any JSON API v1 calls will also have to make this change.
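Client-side stores that were keyed by the old event-id string can switch to a composite key. A minimal sketch, assuming the offset and node ID are read from each event as a `long` and an `int`; the `EventKey` record is hypothetical and not a class from the Java bindings. It orders events by the update they belong to and then by node ID, and it answers "same transaction?" by comparing offsets.

```java
import java.util.Comparator;

/**
 * Hypothetical composite key replacing the Daml 3.2 event-id string.
 * offset identifies the update (transaction) containing the event;
 * nodeId identifies the event's position within that update.
 */
record EventKey(long offset, int nodeId) implements Comparable<EventKey> {
    private static final Comparator<EventKey> ORDER =
        Comparator.comparingLong(EventKey::offset).thenComparingInt(EventKey::nodeId);

    EventKey {
        if (offset < 1) {
            throw new IllegalArgumentException("offset must be >= 1, got " + offset);
        }
        if (nodeId < 0) {
            throw new IllegalArgumentException("nodeId must be >= 0, got " + nodeId);
        }
    }

    /** Orders by update offset first, then by node ID within the update. */
    @Override
    public int compareTo(EventKey other) {
        return ORDER.compare(this, other);
    }

    /** Two events belong to the same update exactly when their offsets match. */
    boolean sameUpdateAs(EventKey other) {
        return offset == other.offset;
    }
}
```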

Universal Event Streams

Currently, a Ledger API (LAPI) client can subscribe to ledger events and receive either a flat transaction stream or a transaction tree stream, but neither provides a complete view. Subscribing to topology events is not available either. Universal Event Streams is a new feature that addresses these limitations and provides additional filtering and formatting capabilities.

The Universal Event Streams feature has transaction filters and streams with the following capabilities:

- Merging of flat and tree transaction capabilities.

- Explicit list of event types to be included in the stream.

- An extended list of event properties that can be turned on and off.

- The same formatting specification is used in the point-wise queries (GetUpdateByOffset, GetUpdateById) and the SubmitAndWaitForTransaction requests.

It combines the topology and package information into a single continuous stream of updates ordered by their offsets. Future event types will be added in a backwards compatible manner.

The structural representation of Daml transaction trees no longer exposes the root event IDs or the children of exercised events. Instead, it exposes the last descendant node ID of each node. As a result, transaction trees are now built differently. This new representation has the following benefits:

- It can represent filtered transaction trees: they can be partially reconstructed when some intermediate nodes are missing, and the is-descendant relationship between the remaining nodes is preserved.

- It is more compact and guarantees bounded per-node storage, even for nodes with a very large number of children.

The representation can be considered a variant of the DFUDS (Depth-First Unary Degree Sequence) or a Nested Set model representation.

Furthermore, the event nodes are guaranteed to be output in Daml execution order, which simplifies processing them in that order. If you do need to traverse the actual tree, a recursive function that takes an additional lastDescendantNodeId argument, indicating when to stop traversing the current node, works well. The figures below illustrate the difference.

Daml 3.2: store all children of a node and root nodes like this:

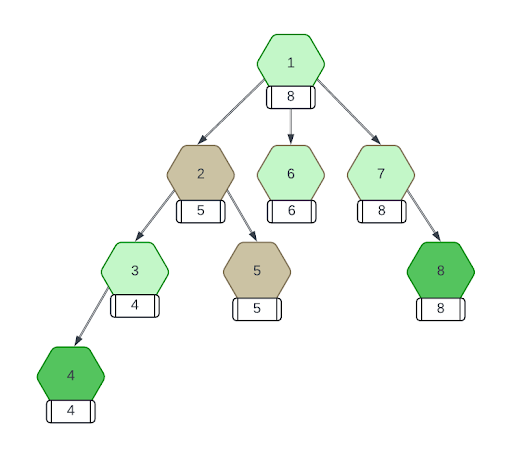

Daml 3.3: store the highest node id of node’s descendants:

The Java bindings include an example helper class that can be used to reconstruct the transaction tree, as well as a helper function getRootNodeIds(). The node IDs of the root nodes (i.e. the nodes that have no ancestors among the returned events) are important for this computation. There is no guarantee that a root node was also a root in the original transaction (i.e. before events were filtered out of it). If the transaction is returned in the AcsDelta shape, all the returned events are trivially root nodes.
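The recursive traversal and the root-node computation described above can be sketched as follows. This is a minimal illustration, not the bindings' helper class or getRootNodeIds(); `TreeNode` and `TreeWalk` are hypothetical, and the sketch assumes events arrive sorted by node ID (execution order) and that a leaf's lastDescendantNodeId equals its own node ID. A node B is a descendant of A exactly when A.nodeId < B.nodeId <= A.lastDescendantNodeId, which is why the walk stops at that boundary.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical event view: node ID plus the highest node ID among its descendants. */
record TreeNode(int nodeId, int lastDescendantNodeId) {}

/** Hypothetical helpers; not the Java bindings' own tree utilities. */
final class TreeWalk {
    /** Parent value recorded for nodes with no ancestor among the returned events. */
    static final int NO_PARENT = -1;

    private TreeWalk() {}

    /** Maps each node ID to its nearest returned ancestor; `nodes` must be sorted by nodeId. */
    static Map<Integer, Integer> parents(List<TreeNode> nodes) {
        Map<Integer, Integer> parentOf = new LinkedHashMap<>();
        walk(nodes, 0, NO_PARENT, Integer.MAX_VALUE, parentOf);
        return parentOf;
    }

    /**
     * Recursively visits consecutive nodes with nodeId <= boundary (the subtree being
     * traversed) and returns the index of the first node outside that subtree.
     */
    private static int walk(List<TreeNode> nodes, int index, int parent, int boundary,
                            Map<Integer, Integer> parentOf) {
        while (index < nodes.size() && nodes.get(index).nodeId() <= boundary) {
            TreeNode node = nodes.get(index);
            parentOf.put(node.nodeId(), parent);
            // The node's descendants are the following nodes up to its lastDescendantNodeId.
            index = walk(nodes, index + 1, node.nodeId(), node.lastDescendantNodeId(), parentOf);
        }
        return index;
    }

    /** Root node IDs: nodes not covered by any earlier node's descendant range. */
    static List<Integer> rootNodeIds(List<TreeNode> nodes) {
        List<Integer> roots = new ArrayList<>();
        int coveredUpTo = Integer.MIN_VALUE; // highest node ID inside any subtree seen so far
        for (TreeNode node : nodes) {
            if (node.nodeId() > coveredUpTo) {
                roots.add(node.nodeId());
            }
            coveredUpTo = Math.max(coveredUpTo, node.lastDescendantNodeId());
        }
        return roots;
    }
}
```

With nodes 0 to 4, where node 0 spans up to 3 and node 1 spans up to 2, `parents` yields 0 and 4 as roots, 1 and 3 as children of 0, and 2 as a child of 1. If node 0 is filtered out, `rootNodeIds` returns 1, 3 and 4, which shows why a returned root need not have been a root of the original transaction.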

For those changes that are required for Splice 0.4.0 see the heading “Required Changes in 3.3” in the section Universal Event Streams.

Application_id and domain are Renamed

Some cosmetic API changes that were deferred during the move from Daml 2 to Daml 3 are bundled into this release.

The first is renaming the application_id in the Ledger API to user_id. The migration changes are described in Application ID to User ID rename. Any JSON API v1 calls will also have to make this change.

The second, in anticipation of multi-synchronizer applications, renames the term domain to synchronizer. The migration changes are described in Domain to Synchronizer rename. Any JSON API v1 calls will also have to make this change.

Interactive Submission

The interactive submission service and external signing authorization logic are now always enabled. The following configuration fields must be removed from the Canton participant node's configuration:

- ledger-api.interactive-submission-service.enabled

- parameters.enable-external-authorization

The hashing algorithm for external signing has been updated from V1 to V2. Daml 3.3 supports only hashing algorithm V2, which is not backwards compatible with V1 for the following reasons:

- There is a new interfaceId field in the Fetch node of the transaction that is now part of the hash.

- The hashing scheme version (now V2) is now part of the hash. The hash provided in the PrepareSubmissionResponse is also computed with the new algorithm.

V1 is no longer supported from Canton 3.3 onward. Refer to the hashing algorithm documentation for the updated version.

This is important for applications that re-compute the V1 hash client-side. Such applications must update their implementation to V2 in order to use the interactive submission service on Canton 3.3.

The following items have also been renamed to better reflect their contents:

- The ProcessedDisclosedContract message in the Metadata message of the interactive_submission_service.proto file has been renamed to InputContract.

- The field disclosed_events in the same Metadata message has been renamed to input_contracts.

- The field submission_time in the InputContract message has been renamed to preparation_time.

Ledger API Interface Query Upgrading

Streaming and point-wise queries have changed as follows to support the Smart Contract Upgrade feature (discussed below):

- Dynamic upgrading of interface filters: for a query on interface iface, the Ledger API delivers events for all templates that can be upgraded to a template version that implements iface. Interface filter resolution is dynamic throughout a stream's lifetime: it is re-evaluated on each DAR upload. Note that history is not redacted: a DAR upload during an ongoing stream does not affect the portion of the ledger that the stream has already scanned. Clients that want to re-read history in light of the upgrades introduced by a DAR upload should rebuild the relevant portion of their ACS view by re-subscribing to the ACS stream and then continuing with an update subscription for the interface filter of interest.

- Dynamic upgrading of interface views: interface view values are rendered using the latest (highest semver) package version of the template implementing the interface instance. Packages vetted with validUntil are excluded from the selection. Note: the version selected for rendering does not change once the stream has started, even if the vetting state evolves.

Smart Contract Upgrade (SCU)

SCU allows Daml models (packages in DAR files) to be updated transparently. For example, you can fix a Daml model's bug by uploading the DAR that has the fix in a new version of the package. It was introduced in Daml v2.9.1 and is now also available in Daml 3.3.

You may need to adjust your development practices so that package versions follow a semantic versioning approach. To prevent unexpected behavior, this feature enforces that a DAR uploaded to a participant node has a unique package name and version, and that packages with the same name are upgrade-compatible. It is therefore not possible to upload multiple DARs with the same package name and version. Make sure you set the package version in your daml.yaml files and increase the version number as you develop new versions.
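Because uploads that reuse a name and version are rejected, it can help to check versions in the build before uploading. A minimal sketch, assuming the versions in daml.yaml use a MAJOR.MINOR.PATCH form; `PackageVersions` is hypothetical and does not perform Canton's upgrade-compatibility checks. It only catches malformed or reused versions and compares versions numerically, so 1.10.0 ranks above 1.9.0.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

/**
 * Hypothetical pre-upload check for package versions. It does NOT perform Canton's
 * upgrade-compatibility checks; it only catches reused or malformed versions early.
 */
final class PackageVersions {
    /** (package name, version) pairs that have already been released. */
    private final Set<String> released = new HashSet<>();

    /** Parses a MAJOR.MINOR.PATCH version (assumed format) into three non-negative integers. */
    static int[] parse(String version) {
        if (!version.matches("\\d+\\.\\d+\\.\\d+")) {
            throw new IllegalArgumentException("expected MAJOR.MINOR.PATCH, got " + version);
        }
        return Arrays.stream(version.split("\\.")).mapToInt(Integer::parseInt).toArray();
    }

    /** Numeric, component-wise comparison, so 1.10.0 ranks above 1.9.0. */
    static int compare(String a, String b) {
        return Arrays.compare(parse(a), parse(b));
    }

    /** Records a release; returns false if this (name, version) pair was already used. */
    boolean register(String packageName, String version) {
        parse(version); // reject malformed versions before recording them
        return released.add(packageName + "@" + version);
    }
}
```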

The 3.x documentation for SCU is in preparation but not yet available. However, the documentation from 2.x is largely applicable and available here with the reference documentation available here; please ignore the protocol version and the Daml-LF version details.

Participant Query Store

The Participant Query Store (PQS) is compatible with both Daml 2 and Daml 3. The only changes needed are those due to type changes in the Ledger API.

The detailed changes are as follows:

- Users of the following columns from the SQL functions in general (creates, archives, exercises) must use the new tuple type (offset::bigint, node_id::int):
  - event_id, create_event_id, archive_event_id, exercise_event_id

- These columns interpret the offset as an integer:
  - offset, created_at_offset, archived_at_offset, exercised_at_offset

- offset is an integer in these functions:
  - validate_offset_exists, validate_pruning_offset, validate_reset_offset, reset_to_offset, prune_to_offset, summary_active, creates (optional parameters), archives (optional parameters), exercises (optional parameters)

- PQS must be instructed to use the "daml3.3" Ledger API on the command line:
  - Container: $ docker run -it --workdir /daml3.3 digitalasset-docker.jfrog.io/participant-query-store:x.x.x --version
  - JAR: use the corresponding JAR, daml3.3/scribe.jar

- Users of the event redaction feature must use the new event_id type (also returned by the Read API), a tuple of integers (offset, node_id), e.g. SELECT redact_exercise((100, 1), '<redaction_id>');

We recommend displaying the event identifying information as <offset>:<node_id>.

This simple Java client is an example of the usage.
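Separately from that client, the recommended `<offset>:<node_id>` display form can be produced and parsed with a small value type. A minimal sketch; `EventRef` is a hypothetical helper, and `parse` accepts exactly two integers separated by one colon, matching the (offset, node_id) tuple used in the redaction example above.

```java
/** Hypothetical value type for an event identifier, displayed as "<offset>:<node_id>". */
record EventRef(long offset, int nodeId) {
    EventRef {
        if (offset < 1 || nodeId < 0) {
            throw new IllegalArgumentException(
                "out-of-range event identifier: " + offset + ":" + nodeId);
        }
    }

    /** Recommended display form, e.g. "100:1". */
    String display() {
        return offset + ":" + nodeId;
    }

    /** Parses the display form back; rejects anything that is not exactly two integers. */
    static EventRef parse(String text) {
        String[] parts = text.split(":", -1);
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected <offset>:<node_id>, got " + text);
        }
        return new EventRef(Long.parseLong(parts[0]), Integer.parseInt(parts[1]));
    }
}
```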

Daml Script

Daml Script has the following changes:

- allocatePartyWithHint has been deprecated and replaced with allocatePartyByHint. Parties can no longer have a display name that differs from the PartyHint.

- daml-script is now a utility package: the datatypes it defines cannot be used as template or choice parameters, and it is transparent to upgrades. Uploading daml-script to a production ledger is strongly discouraged, but this change ensures that doing so is harmless.

- The daml-script library in Daml 3.3 is Daml 3’s name for daml-script-lts in Canton 2.10 (renamed from daml3-script in 3.2). The old daml-script library of Canton 3.2 no longer exists.

Other Changes

- The argument to the SubmitAndWaitForTransaction call has changed from SubmitAndWaitRequest to SubmitAndWaitForTransactionRequest.

- The Ledger API now returns the DAML_FAILURE error instead of the UNHANDLED_EXCEPTION error when an exception is thrown and not caught in Daml code.

- The ACS export now requires a ledger offset for taking the ACS snapshot, instead of an optional timestamp. The new ACS export does not feature an offboarding flag anymore; offboarding is not ready for production use and will be addressed in a future release.

- Ledger Metering has been removed. This involved:
  - deleting MeteringReportService in the Ledger API
  - deleting the /v2/metering endpoint in the JSON API
  - deleting the console command ledger_api.metering.get_report

- The package vetting ledger-effective-time boundaries changed to validFrom being inclusive and validUntil being exclusive whereas previously validFrom was exclusive and validUntil was inclusive.

- The endpoint PackageService.UploadDar now accepts a list of DARs that can be uploaded and vetted together. The same change applies to ParticipantAdminCommands.Package.UploadDar.

- Renamed configuration parameter session-key-cache-config to session-encryption-key-cache.

- sequencer_authentication_service returns gRPC errors instead of a dedicated failure message with status OK.

- display_name is no longer part of Party data, so it has been removed from party allocation and update requests in the Ledger API and Daml Script.

- PartyNameManagement service was removed from the Admin API

- Renamed the request/response protobuf messages of the inspection, pruning, and resource management services from Endpoint.Request to EndpointRequest, and likewise for the response types.

- The Daml Compiler flags bad-{exceptions,interface-instances} and warn-large-tuples no longer exist

- Daml assistant has two changes. The daml package and daml merge-dars commands no longer exist. The daml start command no longer supports hot reload as this feature is incompatible with Smart Contract Upgrades.

- Typescript code generation no longer emits code for utility packages.
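The changed package-vetting boundaries listed above amount to a half-open interval [validFrom, validUntil). A minimal sketch of the new rule, assuming the ledger effective time and the bounds are `java.time.Instant` values and that either bound may be absent; `VettingWindow` is a hypothetical type, not a Canton API.

```java
import java.time.Instant;
import java.util.Optional;

/** Hypothetical vetting window with the Daml 3.3 semantics: [validFrom, validUntil). */
record VettingWindow(Optional<Instant> validFrom, Optional<Instant> validUntil) {
    /** True when the package counts as vetted at the given ledger effective time. */
    boolean contains(Instant ledgerEffectiveTime) {
        // validFrom is inclusive: a time equal to validFrom is inside the window.
        boolean afterStart = validFrom
            .map(from -> !ledgerEffectiveTime.isBefore(from))
            .orElse(true);
        // validUntil is exclusive: a time equal to validUntil is outside the window.
        boolean beforeEnd = validUntil
            .map(until -> ledgerEffectiveTime.isBefore(until))
            .orElse(true);
        return afterStart && beforeEnd;
    }
}
```

Under the previous semantics, the two boundary checks would have been reversed: a time equal to validFrom was outside the window and a time equal to validUntil was inside it.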

Updates Needed before Splice 0.5.0

This section describes the API features that are deprecated in Splice 0.4.0 and that will require application changes before running on a network upgraded to Splice 0.5.0, which is slated for release in late 2025. Additional blog posts will provide more detailed migration information. Please monitor the #gsf-global-synchronizer-appdev channel for announcements.

Canton JSON Ledger API v1 is Deprecated by Canton JSON API v2

As mentioned, JSON Ledger API v1 is deprecated in this release and will be removed in Splice 0.5.0/Daml 3.4. Applications therefore need to migrate to JSON Ledger API v2, which is available in Splice 0.4.0/Daml 3.3.

Note that JSON API v2 does not support the query-by-attribute capabilities currently offered by JSON API v1. These queries have proven problematic and are no longer supported. Use the LAPI point-wise query APIs instead or, for more general querying capabilities, the Participant Query Store (PQS).

The migration details are available in the "HTTP JSON API Migration to V2 guide". Note that the @daml/ledger library does not work with V2 because it targets Canton JSON API V1.

Identifier Addressing by-package-name

In Daml 3.3, Smart Contract Upgrade supports two formats for specifying interface and template identifiers to the Ledger API:

- package-name reference format, which uses the package name as the root identifier, such as #<package-name>:<module>:<entity>. This format was introduced with SCU.

- package-id reference format, which uses the package ID in the format <package-id>:<module>:<entity>.

The package-id reference format will not be supported in Splice 0.5.0. Applications must therefore switch to the package-name reference format for all requests submitted to the Ledger API (commands and queries).
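When migrating, a client can detect package-id references and rewrite them in the package-name format. A minimal sketch; `TemplateIds` is hypothetical, it distinguishes the two formats only by the leading `#` shown above, and the caller must supply the package name that corresponds to a given package ID (for example from its own build metadata).

```java
/** Hypothetical helpers for Ledger API template/interface identifier strings. */
final class TemplateIds {
    private TemplateIds() {}

    /** Builds a package-name reference: #<package-name>:<module>:<entity>. */
    static String byPackageName(String packageName, String module, String entity) {
        return "#" + packageName + ":" + module + ":" + entity;
    }

    /** Package-name references start with '#'; package-id references do not. */
    static boolean usesPackageName(String identifier) {
        return identifier.startsWith("#");
    }

    /**
     * Rewrites a <package-id>:<module>:<entity> reference using the given package name.
     * Identifiers already in package-name format are returned unchanged.
     */
    static String toPackageNameReference(String identifier, String packageName) {
        if (usesPackageName(identifier)) {
            return identifier;
        }
        String[] parts = identifier.split(":", 3);
        if (parts.length != 3) {
            throw new IllegalArgumentException(
                "expected <package-id>:<module>:<entity>, got " + identifier);
        }
        return byPackageName(packageName, parts[1], parts[2]);
    }
}
```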

Universal Streams

The Universal Event Streams section above introduces this new feature and describes the minimal changes needed to work with Splice 0.4.0. To work with Splice 0.5.0, further application updates are needed because the deprecated APIs will be removed. The migration details are under the heading “Changes Required before the Next Major Release” in the section Universal Streams.

Java Bindings

The java-binding classes that wrap the deprecated Ledger API messages will be removed in the next major release:

- GetTransactionByIdRequest

- GetTransactionByOffsetRequest

- GetTransactionResponse

- GetUpdateTreesResponse

- SubmitAndWaitForTransactionTreeResponse

- TransactionFilter

- TransactionTree

- TransactionTreeUtils

- TreeEvent

- etc.

You must therefore remove any use of these deprecated classes to be ready to adopt the Splice 0.5.0 release.

Use Canton's Error Description instead of Daml Exceptions

Canton has a standard model for describing errors, based on the standard gRPC error model. Standardized LAPI error responses "enable API clients to construct centralized common error handling logic. This common logic simplifies API client applications and eliminates the need for cumbersome custom error handling code."

In Daml 3.2 there are two error handling systems:

- The Daml level: Daml exceptions within the Daml code. User-defined exception types are thrown (via the throw keyword) and caught using try/catch.

- The LAPI client level: It uses Canton's standard model mentioned above.

Daml exceptions are deprecated in Daml 3.3 so please consider migrating away from them.

Daml 3.3 introduces the failWithStatus Daml method, so that user-defined Daml errors can be created directly and passed to the ledger client. The Ledger API client can then inspect and handle errors raised by Daml code and by Canton in the same way. This approach has several benefits for applications:

- It makes it easy to raise the same Daml error from different implementations of the same interface (e.g. the CN token standard APIs).

- It integrates cleanly into the LAPI client since Canton's error-ids are generated within the Daml code and can extend a subset of these ids.

- Canton will also map all internal exceptions into a FailureStatus error message so that the ledger client can handle them uniformly.

- It avoids parsing Daml user exceptions to turn them into an informative client-side error response, which is simpler and more straightforward.

- Provides support for passing error specific metadata in a unified way back to clients.

An example will help make this concrete. Consider the following Daml exception:

```daml
exception InsufficientFunds
  with
    required : Int
    provided : Int
  where
    message "Insufficient funds! Needed " <> show required <> " but only got " <> show provided
```

This would have been received by a ledger client as:

```
Status(
  code = FAILED_PRECONDITION, // The gRPC status code
  message = "UNHANDLED_EXCEPTION(9,...): Interpretation error: Error: Unhandled Daml exception: App.Exceptions:InsufficientFunds@...{ required = 10000, provided = 7000}",
  details = List(
    ErrorInfoDetail(
      // The Canton error ID
      errorCodeId = "UNHANDLED_EXCEPTION",
      metadata = Map(
        participant -> "...",
        // The Canton error category
        category -> InvalidGivenCurrentSystemStateOther,
        tid = "...",
        definite_answer = false,
        commands = ...
      )
    ),
    RequestInfo(
      correlationId = "..."
    ),
    ResourceInfo(
      typ = "EXCEPTION_TYPE",
      // The Daml exception type name
      name = "<pkgId>:App.Exceptions:InsufficientFunds"
    ),
    ResourceInfo(
      typ = "EXCEPTION_VALUE",
      // The InsufficientFunds record, with "required" and "provided" fields
      name = "<LF-Value>"
    ),
  )
)
```

Now it will be received by the client as:

```
Status(
  code = 9, // FAILED_PRECONDITION
  message = "DAML_FAILURE(9, ...): UNHANDLED_EXCEPTION/App.Exceptions:InsufficientFunds: Insufficient funds! Needed 10000 but only got 7000",
  details = List(
    ErrorInfoDetail(
      errorCodeId = "DAML_FAILURE",
      metadata = Map(
        "error_id" -> "UNHANDLED_EXCEPTION/App.Exceptions:InsufficientFunds",
        "category" -> "InvalidGivenCurrentSystemStateOther",
        ... other canton error metadata ...
      )
    ),
    ...
  )
)
```
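Because DAML_FAILURE carries a stable `error_id` in its metadata, clients can branch on that value rather than parse the message text. A minimal sketch, assuming the ErrorInfo metadata has already been extracted into a `Map<String, String>` (with grpc-java, one way is `io.grpc.protobuf.StatusProto.fromThrowable` followed by unpacking the `com.google.rpc.ErrorInfo` detail and calling `getMetadataMap()`); `DamlFailures` and its `Action` values are hypothetical.

```java
import java.util.Map;
import java.util.Optional;

/**
 * Hypothetical client-side handling of DAML_FAILURE errors. Assumes the ErrorInfo
 * metadata has already been extracted from the gRPC status into a Map.
 */
final class DamlFailures {
    /** Hypothetical reactions an application might choose. */
    enum Action { SHOW_INSUFFICIENT_FUNDS, REPORT }

    private DamlFailures() {}

    /** The error_id metadata entry carried by DAML_FAILURE errors, if present. */
    static Optional<String> errorId(Map<String, String> metadata) {
        return Optional.ofNullable(metadata.get("error_id"));
    }

    /** Branches on the stable error ID instead of parsing the human-readable message. */
    static Action classify(Map<String, String> metadata) {
        return errorId(metadata)
            .filter("UNHANDLED_EXCEPTION/App.Exceptions:InsufficientFunds"::equals)
            .map(id -> Action.SHOW_INSUFFICIENT_FUNDS)
            .orElse(Action.REPORT);
    }
}
```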

by Curtis Hrischuk

May 14, 2025
