A recent study showed that almost 40% of an organization's AI, analytics and data management investment goes towards aggregating data from multiple internal and external source systems, reconciling inconsistencies, and creating a clean golden source of information. In addition, a significant share of IT and operational customer service budgets is spent mitigating and addressing data quality issues caused by inconsistent data silos.

Given the importance of AI in today’s data-driven digital economy, these data management challenges inhibit agility and slow down insights-driven innovation. In addition, poor data quality can lead to significant overhead in meeting compliance and regulatory reporting needs.

In this blog post, we will first review the traditional approach to data management, and then outline a new approach to bridging data silos using smart contracts. We believe this can be a useful addition to an organization’s data science toolkit for addressing the data management problem and achieving a clean source of data to drive AI and analytics initiatives. We will also introduce how this has been achieved in practice with our enterprise integration solution Gen.Flow, which uses Daml smart contracts and allows all digital applications to operate from a golden source for both business processes and data. Special thanks to Manish Grover from Digital Asset for his insights.

How do current data management approaches work?

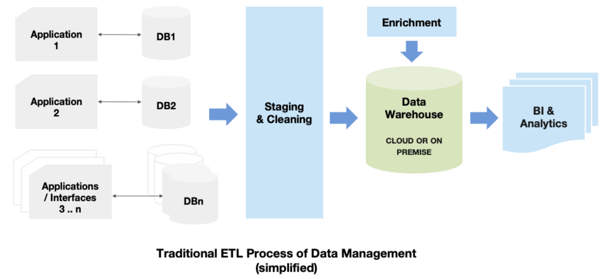

Typically, an enterprise business process is executed by multiple applications that work with each other to take actions and move the workflow forward. Each of these applications may have its own application database (aka source system), and they pass data back and forth to keep these data silos in sync with each other.

For example, a credit card business process may consist of a card origination system that creates a new credit card, followed by business applications that handle transaction processing, fraud detection, loyalty management, e-statements, and customer service. These individual applications may be a combination of home-grown and externally sourced technologies, and they may have been added at different times for different geographies.

The complexity and business-need-driven evolution of such an application landscape does not allow an enterprise to maintain a single, tightly controlled monolithic system. As a result, multiple applications exist, often with their own separate databases, and are synced with each other through enterprise-grade mechanisms. Common approaches to achieving these process and data management objectives are middleware-enabled hub-and-spoke architectures, BPM systems that connect applications, API exchanges, and plain vanilla batch file transfers.

As the business process is executed (as in the above scenario), various analytic demands must also be satisfied. For example, the marketing organization may need insights to perform targeting and segmentation analytics so they can optimize upcoming customer campaigns.

So in order to serve these business reporting and analytics needs, the traditional approach has been to aggregate the data from various source systems, clean and reconcile inconsistencies, and then load it into a central enterprise data warehouse. The extent of this central data store may vary by enterprise. The golden source of truth is still the individual applications that own the business process. Many enterprises have multiple such warehouses (e.g. typically for marketing and compliance), while other enterprises create specific data marts off a central warehouse. As a result, tracking a data inconsistency back to the system that owns that data is complex to say the least.
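To make the reconciliation step concrete, here is a minimal Python sketch of what "clean and reconcile inconsistencies" often boils down to: comparing the same entity across source system extracts and flagging fields where they disagree. The system names, field names, and records are hypothetical and only serve to illustrate the idea; a real pipeline would also need matching rules, survivorship logic, and lineage tracking.

```python
from collections import defaultdict

# Hypothetical extracts from two source systems; all names and values are illustrative.
origination = [
    {"card_id": "C1", "customer": "Ann Lee", "status": "active"},
    {"card_id": "C2", "customer": "Bo Chen", "status": "active"},
]
fraud_system = [
    {"card_id": "C1", "customer": "Ann Lee", "status": "active"},
    {"card_id": "C2", "customer": "Bo Chen", "status": "blocked"},
]

def find_conflicts(sources):
    """Return {card_id: {field: {system: value}}} for fields where systems disagree."""
    seen = defaultdict(lambda: defaultdict(dict))
    for system, rows in sources.items():
        for row in rows:
            for field, value in row.items():
                if field != "card_id":
                    seen[row["card_id"]][field][system] = value
    conflicts = {}
    for card_id, fields in seen.items():
        # Keep only the fields that have more than one distinct value across systems.
        bad = {f: v for f, v in fields.items() if len(set(v.values())) > 1}
        if bad:
            conflicts[card_id] = bad
    return conflicts

conflicts = find_conflicts({"origination": origination, "fraud": fraud_system})
print(conflicts)
```

Even in this toy case, the output only tells us that the two systems disagree about card C2; deciding which system is right still requires knowing which application owns that piece of data.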

It is natural that significant effort is expended in staging, reconciling and aggregating data. The bigger the organization, the more source systems and application silos exist, and the overall data management infrastructure becomes even more complex. Challenges arise in business innovation due to the complexity of integrating additional services.

Finally, there is the issue of access control and compliance. Not everyone should get the same level of access to information, and every step in the business process must be reported and audited for compliance and regulatory purposes. Anti-money laundering and audit are common use cases.

So, what can be done to solve this data management problem? How can these multiple application data stores be bridged to produce a clean version of data without having to constantly aggregate and reconcile these source systems?

How Smart Contracts Can Enhance Traditional Data Management

Smart contracts bring two fundamental changes to the way we look at our business information and processes.

- The first is that any business action causes a change to underlying data in a deterministic manner. That means we can now think of key entities in terms of “digital assets” on which actions are taken. For example, a credit card issued to a customer is a digital asset, on which a fraud flag is raised, a payment is made, a campaign is run etc.

- Second, the entire business process of an enterprise now takes tangible form instead of being buried in multiple flow charts and documents. As any enterprise technology practitioner knows, documentation is out of date as soon as it is produced. With smart contracts, your business process is codified into functioning software, which is then maintained whenever you make a change and governed as the organizational hierarchy demands.

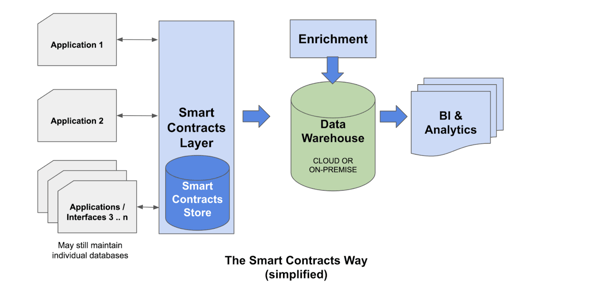

In addition, a smart contract-based applications landscape enjoys a single version of truth in the form of the contracts store. This source of data does not need to be reconciled or aggregated post-facto, but is automatically created as business processes execute over time.
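The idea of a contracts store that builds itself as the process executes can be sketched in a few lines of Python. This is not Daml and not Gen.Flow's implementation; it is a simplified illustration, under our own assumptions, of an append-only store in which every business action archives the current version of a "digital asset" and creates its successor. The class and field names (`ContractStore`, `CreditCard`, `exercise`) are hypothetical.

```python
from dataclasses import dataclass, replace
from itertools import count

@dataclass(frozen=True)
class CreditCard:
    """An immutable 'digital asset'; fields are illustrative."""
    card_id: str
    holder: str
    balance: int = 0          # in cents
    fraud_flag: bool = False

class ContractStore:
    """Toy contracts store: active contracts plus an append-only event log."""
    def __init__(self):
        self._ids = count(1)
        self.active = {}      # contract id -> current contract
        self.log = []         # history of (event, contract id, payload)

    def create(self, contract):
        cid = next(self._ids)
        self.active[cid] = contract
        self.log.append(("create", cid, contract))
        return cid

    def exercise(self, cid, **changes):
        """Archive the contract and create its successor with the given changes."""
        old = self.active.pop(cid)          # raises KeyError if already archived
        self.log.append(("archive", cid, old))
        return self.create(replace(old, **changes))

store = ContractStore()
c1 = store.create(CreditCard("C1", "Ann Lee"))
c2 = store.exercise(c1, fraud_flag=True)    # fraud system raises a flag
c3 = store.exercise(c2, balance=-2500)      # a transaction is posted

print(store.active[c3])
print([event for event, _, _ in store.log])
```

The current state (`store.active`) and the full audit trail (`store.log`) come from the same sequence of actions, so there is nothing to reconcile afterwards.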

In addition, data no longer just works as a static record driven by applications. It can itself trigger events and drive processes forward when it changes, regardless of what is set up on the application layers. For example, a fraud alert raised on a credit card can trigger an action on the payments receivables system to alter when a payment is due. New views or data marts can be created off the central smart contracts store.
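As a rough illustration of data driving the process, the Python sketch below lets a record notify subscribers when one of its fields changes, so that raising a fraud flag pushes out the payment due date. The `ObservableRecord` class, the field names, and the 30-day deferral are assumptions made for this example only; they do not describe how Daml or Gen.Flow implement triggers.

```python
from datetime import date, timedelta

class ObservableRecord:
    """Toy record that calls registered callbacks when a field's value changes."""
    def __init__(self, **fields):
        self._fields = dict(fields)
        self._listeners = {}   # field name -> list of callbacks

    def on_change(self, field, callback):
        self._listeners.setdefault(field, []).append(callback)

    def get(self, field):
        return self._fields[field]

    def set(self, field, value):
        old = self._fields.get(field)
        self._fields[field] = value
        if old != value:       # only notify on an actual change
            for callback in self._listeners.get(field, []):
                callback(self, old, value)

card = ObservableRecord(card_id="C1", fraud_flag=False, payment_due=date(2020, 6, 15))

def defer_payment(record, old, new):
    # Hypothetical receivables rule: a raised fraud flag defers the due date by 30 days.
    if new:
        record.set("payment_due", record.get("payment_due") + timedelta(days=30))

card.on_change("fraud_flag", defer_payment)
card.set("fraud_flag", True)
print(card.get("payment_due"))
```

The receivables rule reacts to the change in the data itself, so it does not matter which application raised the flag.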

So, we transform the challenge of post-facto aggregation of data into a problem of post-facto distribution of data to those who need it.
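Distribution then becomes a matter of projecting the central store into role-specific views. The following Python sketch shows one simple way to think about it, assuming a hypothetical mapping from roles to the fields they may see; the roles, fields, and records are illustrative and not taken from any real deployment.

```python
# Hypothetical snapshot of the central contracts store.
CONTRACTS = [
    {"card_id": "C1", "holder": "Ann Lee", "segment": "gold", "fraud_flag": True, "balance": -2500},
    {"card_id": "C2", "holder": "Bo Chen", "segment": "basic", "fraud_flag": False, "balance": 0},
]

# Hypothetical access policy: which fields each role is allowed to see.
VISIBLE_FIELDS = {
    "marketing": {"card_id", "segment"},
    "compliance": {"card_id", "holder", "fraud_flag", "balance"},
}

def view_for(role, contracts):
    """Return the contracts restricted to the fields visible to the given role."""
    allowed = VISIBLE_FIELDS.get(role)
    if not allowed:
        return []              # unknown roles see nothing
    return [{k: v for k, v in c.items() if k in allowed} for c in contracts]

marketing_view = view_for("marketing", CONTRACTS)
print(marketing_view)
```

Each view is derived from the same source, so a marketing data mart and a compliance report cannot drift apart the way independently loaded warehouses can.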

The Smart Contracts Approach in Practice: Introducing Gen.Flow

At this point it is important to note that while smart contracts and Distributed Ledger Technologies (DLT) are complex to architect and implement, there is a key difference between the two.

- Smart contracts (specifically Daml smart contracts) do not need a blockchain to run. Instead they can utilize traditional data stores. Thus they can be adopted by any institution looking to harmonize processes and data, regardless of their technical landscape.

- Blockchains and distributed ledgers, on the other hand, are a medium-term bet. Most organizations do not have incentives to adopt DLT and blockchain for internal use cases. Some of the most common disincentives are the complexity of consensus protocols, the infrastructure management overhead of nodes and security keys, performance bottlenecks, and potentially high cost of operations. Consortia and shared-services providers can benefit from modern mutualized ledgers because multiple organizations are involved and require a high trust threshold.

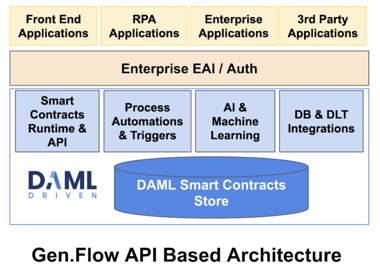

Based on this new approach using smart contracts, we have created Gen.Flow, a smart contract-driven orchestration layer that uses Daml smart contracts and is hence persistence-layer agnostic (it can run on both blockchains and databases). This approach mitigates the data management problem, reduces the complexity of adoption, and accelerates time-to-market for AI and analytics programs by bridging data silos without requiring constant reconciliation.

Gen.Flow is the culmination of our efforts to help solve some of the key challenges that organizations have shared with us. By using Daml, Gen.Flow is also capable of integrating seamlessly with an external DLT network.

Some interesting points to note:

- Gen.Flow’s smart contracts-based approach works along with your BPM or EAI based architectures. The BPM or EAI layer invokes the Gen.Flow API exposed by smart contracts.

- In addition, a unified API interface allows for organizations using Robotic Process Automation to integrate robots with smart contracts to unlock next generation intelligent automations and industrial savings. For example, a robot may need to create an audit trail, or access the status of a fraud flag before closing a case opened by the contact center. By integrating RPA with smart contracts, the robots can become more intelligent and also create a ready-made audit and compliance trail.

- The architecture allows organizations to abstract away the technical complexities of blockchains and smart contracts so they can focus on developing process-driven applications.

In order to augment AI and automation capabilities, organizations can now pair smart contracts-enabled technologies with Artificial Intelligence and Machine Learning applications. The general idea is that smart contracts can be used to orchestrate processes and transactions closer to both business rules and core data, thus harmonizing the underlying information to create a golden source of truth. Robots can more seamlessly connect to core data and orchestration workflows. Artificial Intelligence and Machine Learning applications can leverage smart contracts and harmonized data to further extend analytics capabilities – we call this full-stack automation.

Gen.Flow leverages smart contracts as a process-driven layer of applications that is more abstracted from technology and organizational constraints, and more in sync with the business information flow itself. Smart contract applications closely emulate and help centralize business rules.

In Summary

The potential benefits of smart contract applications for creating automated and intelligent digital capabilities are becoming clearer and more appealing to organizations. They allow organizations to leverage functionalities such as non-repudiation and atomic transactions, process-driven development, seamless multi-party integration, and higher abstraction from technical constraints. In short, they let organizations approach automation from a business and process-driven perspective.

In our view, a smart contracts-based integration layer across applications is a powerful addition to the enterprise data management toolkit. It accelerates digital and insights-driven business transformation, and unlocks a variety of operational benefits.

Daml also has a new Learn section where you can begin to code online:

Learn Daml online

by Guido Santos

May 26, 2020
