Daml helps preserve the confidentiality of sensitive DLT contractual information

Distributed Ledger Technology (DLT) promises to break down information silos between mutually distrusting parties while maintaining integrity, availability, and privacy. In “Trust but verify” is a valuable DLT model — does your language support it?, Alex and Ratko showed how Digital Asset’s contract modeling language, Daml, is designed to help developers streamline multi-party business flows — thus improving availability in a set of market infrastructures. Daml similarly assists the developer in preserving privacy while maintaining the integrity of the ledger — and that will be the topic of this post. We start by defining what privacy means, and then show how some prominent DLT technologies stack up to this definition. Finally, we show how, thanks to Daml, the DA Platform achieves privacy without sacrificing integrity and compare our approach to other DLT technologies.

What is privacy in DLT?

DLT enables transactional processing of data that is distributed among participants. To define privacy, we require an underlying notion of a need to know basis, which tells us which participants are entitled to see what data. We can then say that a DLT system preserves privacy if data is shared only with those participants who are entitled to see it per that need to know basis.

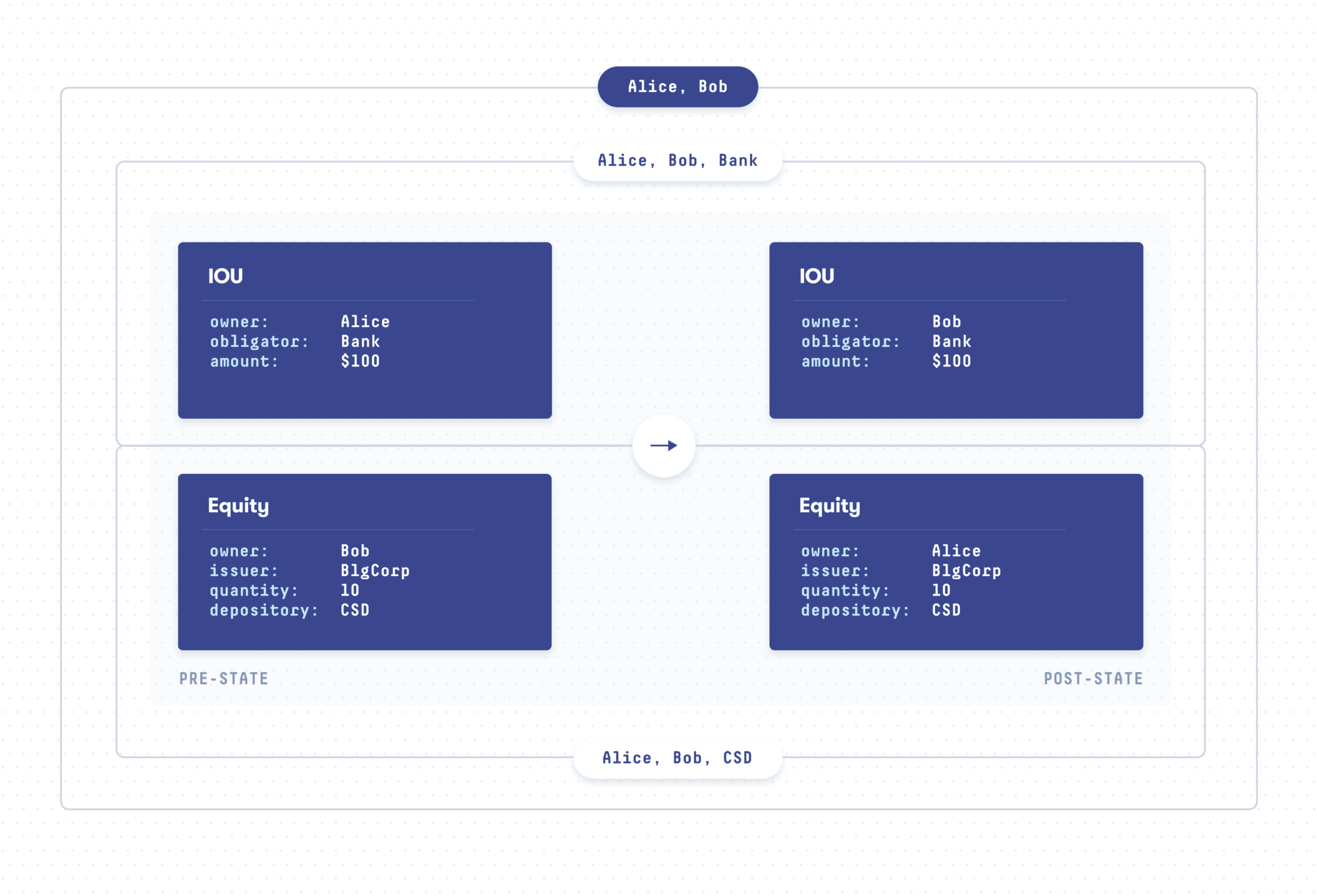

For an example from the financial domain, consider a simple Delivery vs Payment (DvP) transaction. Alice transfers some amount of funds that she holds in a bank in exchange for some of Bob’s BigCorp shares, held at a Central Securities Depository (CSD). We can visualize such a transaction as follows:

Intuitively, we expect that Alice and Bob are entitled to all the data in this transaction. The bank is entitled to see that money has been moved from Alice to Bob, such that it knows whose account currently holds the money; the same holds for the CSD and the shares. The bank, however, does not need to know what Alice spent her money on.
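To make the definition concrete, here is a minimal sketch of the need to know basis for the DvP example. It is illustrative only: the data item names (`cash_transfer`, `share_transfer`, `dvp_link`) and the helper `preserves_privacy` are assumptions introduced for this sketch and do not come from any DLT platform.

```python
# Hypothetical need-to-know basis for the DvP example:
# each party maps to the data items it is entitled to see.
NEED_TO_KNOW = {
    "Alice": {"cash_transfer", "share_transfer", "dvp_link"},
    "Bob": {"cash_transfer", "share_transfer", "dvp_link"},
    "Bank": {"cash_transfer"},
    "CSD": {"share_transfer"},
}


def preserves_privacy(disclosed, need_to_know):
    """Return True if every party received only data it is entitled to see."""
    return all(
        items <= need_to_know.get(party, set())
        for party, items in disclosed.items()
    )


# A fully public log discloses every item to every party, including the bank.
public_log = {
    party: {"cash_transfer", "share_transfer", "dvp_link"}
    for party in NEED_TO_KNOW
}

# A selective disclosure that matches the need-to-know basis exactly.
selective = {party: set(items) for party, items in NEED_TO_KNOW.items()}

print(preserves_privacy(public_log, NEED_TO_KNOW))  # False
print(preserves_privacy(selective, NEED_TO_KNOW))   # True
```

The check only asks whether anyone saw too much. Whether anyone saw too little is a separate question that comes up later in this post.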

Privacy and integrity are both critical properties of DLT — so how can ledger participants be convinced of the integrity of the system when transactions are only partially and selectively revealed to them? Let’s examine some techniques being used that attempt to achieve privacy while preserving integrity in DLT systems.

Public (permissionless) ledgers

The DLT field can trace its roots back to DigiCash in the 1980s. It was however the invention of Bitcoin, first described in a paper published in 2008 by the pseudonymous Satoshi Nakamoto, that sparked a new wave of interest. Bitcoin enables the transfer of value without trusted central parties and relies on a public shared log of transactions to ensure integrity. Since the log is public, all transfers of value are publicly disclosed to all network participants. This clearly violates the need to know principle and, as stated in the Nakamoto paper, is intentionally in direct contrast to the traditional banking model — which “achieves a level of privacy by limiting access to information to the parties involved and the trusted third party.” The public log approach to maintaining integrity is used in many DLT systems, and several techniques for data obfuscation are being used to inject some level of privacy into these systems.

Privacy through pseudonyms. Bitcoin keeps the ownership of accounts pseudonymous, so that “the public can see that someone is sending an amount to someone else, but without information linking the transaction to anyone”. Even though Bitcoin focuses exclusively on the transfer of the underlying cryptocurrency and has deliberately limited support for smart contracts, the general idea can be illustrated in our DvP example (indeed, pseudonymization is also used in systems with more flexible smart contract capabilities, such as Ethereum). The pseudonymized transaction looks as follows:

Alice and Bob can choose to share some data out-of-band to let each other know who is behind the pseudonyms. The other participants cannot resolve the pseudonyms, but they see all the transaction details. This runs contrary to many regulations, which require assurances that transaction details are kept private to only those parties engaged in the transaction. Furthermore, while pseudonymization has worked well so far to protect the real identity of Satoshi Nakamoto, research shows that it is less successful in protecting Bitcoin participants. The public logs leak a substantial amount of data about relations between transactions, and it is actually not all that difficult to link individuals with their Bitcoin transactions.
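The following toy sketch illustrates both points: pseudonyms hide names but not transaction details, and a reused pseudonym lets any observer link transactions together. The keys, amounts, and the `pseudonym` helper are made up for illustration and are not how any particular ledger derives addresses.

```python
import hashlib


def pseudonym(public_key: bytes) -> str:
    """Derive a short pseudonym from a (hypothetical) public key."""
    return hashlib.sha256(public_key).hexdigest()[:12]


# Toy stand-ins for real public keys.
alice = pseudonym(b"alice-public-key")
bob = pseudonym(b"bob-public-key")
carol = pseudonym(b"carol-public-key")

# What every participant sees on the public log:
# names are hidden, but assets and amounts are not.
log = [
    {"payer": alice, "payee": bob, "asset": "USD", "amount": 100},
    {"payer": alice, "payee": carol, "asset": "USD", "amount": 40},
]


def linked_activity(entries, who):
    """Any observer can collect all entries that mention one pseudonym."""
    return [e for e in entries if who in (e["payer"], e["payee"])]


# Without knowing who is behind the pseudonym, an observer still learns
# that the same account made both payments, and how much it paid.
for entry in linked_activity(log, alice):
    print(entry["payee"], entry["amount"])
```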

Privacy through encryption. In this approach, encryption is used to selectively “redact” parts of the data from the public log. For example, redacting the amounts in our DvP example would yield the following log entry:

While this approach still violates the need to know principle as most data is shared among all participants, encryption can still provide some amount of privacy. Encryption can be combined with the pseudonymization of identities to further enhance privacy. However, there are many caveats. First, encryption poses a challenge for integrity, as the changes to encrypted data are not verifiable by the public. Furthermore, such encryption schemes are typically not resilient against weaknesses in the underlying cryptography: should the cryptography become broken in the future, privacy is lost. Thus, many jurisdictions maintain data domicile laws, requiring that data relating to their constituents not be shared among outside entities — even if encrypted. Taken together, these drawbacks make selective encryption very unattractive as the basis for a DLT to be used in regulated markets.

Privacy through zero-knowledge proofs. Zero-knowledge proofs (ZKPs) are a novel approach to privacy in DLT, whose use was pioneered by Zcash. In this scheme, the public log does not contain the transaction data. Instead, it contains a so-called commitment to this data, together with a correctness proof for a transaction without revealing its contents — decoupling privacy from integrity. The full transaction contents can then be shared privately on a need to know basis while the ZKPs in the public log give integrity guarantees to all DLT participants.
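As a rough illustration of the commitment half of this scheme, the sketch below uses a plain salted hash. This is an assumption made for brevity: a hash commitment hides the data and binds the sender to it, but it is not a zero-knowledge proof and proves nothing about correctness to parties who never receive the data. The function names and the sample transaction are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(data: bytes) -> tuple:
    """Return (commitment, nonce). Only the commitment goes on the public log."""
    nonce = secrets.token_bytes(32)
    return hashlib.sha256(nonce + data).digest(), nonce


def opens_to(commitment: bytes, nonce: bytes, data: bytes) -> bool:
    """Check privately shared data against the public commitment."""
    expected = hashlib.sha256(nonce + data).digest()
    return hmac.compare_digest(commitment, expected)


# Hypothetical transaction contents, shared only on a need-to-know basis.
transaction = b"Alice pays Bob for BigCorp shares"
commitment, nonce = commit(transaction)

# A party who receives (nonce, transaction) privately can check it
# against the public log; altered contents do not match.
print(opens_to(commitment, nonce, transaction))
print(opens_to(commitment, nonce, b"some other transaction"))
```

In a real ZKP-based ledger, the public log would also carry a proof that the committed transaction obeys the ledger rules. That proof is the computationally expensive part.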

The main drawback of ZKP schemes is that they are currently computationally expensive. Even within Zcash, empirical analysis shows that the high computation cost is incentivizing poor practices which currently reduce privacy. There are exciting efficiency improvements underway in zero-knowledge proof construction. Many such efforts specifically target simple value transfers, such as Confidential Transactions and Bulletproofs, developed by Blockstream for the Bitcoin network, and Chain’s derivative Confidential Assets for use on their networks. But these are still early days; the computational cost is still prohibitive for most distributed applications, and bugs are still common. Furthermore, when bugs are discovered it is inherently impossible by design to triage them. At Digital Asset, we have deep expertise in this domain and will continue to monitor and assess these technologies as they mature.

Private (permissioned) ledgers

A more recent wave of DLT, targeted more specifically at regulated markets, uses private (permissioned) ledgers where one or more ledger operators act as gatekeepers to the system. This not only solves issues such as KYC and AML compliance problems that affect public ledgers but also allows for more comprehensive privacy solutions, in addition to those used by public ledgers.

Privacy through isolation. Within the permissioned ledger category, a popular approach to privacy is to keep data access limited to a subset of all ledger participants. An example is channels in Hyperledger Fabric. Within a channel, all transaction data is visible to all channel members, but not to anyone else on the network. Thus, Fabric channels provide privacy according to our definition only if the access to channels coincides with the need to know basis. In practice, this can lead to a proliferation of channels, which re-introduces the sort of data silos that DLT is designed to eliminate, and which can have a serious impact on scalability. To compensate for these limitations, Fabric 1.1 has introduced support for encryption. Furthermore, an upcoming version of Fabric will introduce private transactions, which redact the data inside a channel using hash commitments and store the data in private, off-ledger side databases (“SideDB”). Lastly, Fabric is also investigating a form of ZKPs that they call Zero Knowledge Asset Transfer.
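The channel proliferation mentioned above can be illustrated with a back-of-the-envelope sketch. It assumes, as a simplification, that a channel gives privacy only when its membership equals the set of parties entitled to the data, so each distinct set of entitled parties needs its own channel. The party names and the helper `channels_needed` are hypothetical, and nothing here uses Fabric's actual API.

```python
from itertools import combinations


def channels_needed(transactions):
    """One channel per distinct set of entitled parties."""
    return {frozenset(parties) for parties in transactions}


parties = ["A", "B", "C", "D"]

# Every pair of parties trades bilaterally and wants the trade kept
# private from the other two.
bilateral_deals = [set(pair) for pair in combinations(parties, 2)]

print(len(channels_needed(bilateral_deals)))  # 6
```

With n parties trading pairwise, the count grows as n(n-1)/2, and each channel is its own silo of data.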

Corda also uses isolation, but on the level of transactions. Transactions in R3’s Corda platform atomically consume and produce multiple contracts; contract developers specify the set of parties who are privy to each transaction. Other parties — apart from notaries (trusted central entities that operate the Corda network) — receive no data about the transaction. For example, in Corda, our DvP transaction could only be shown to Alice, Bob, the bank, and the CSD. Alternatively, Corda can hide the entire transaction from the bank and the CSD until the assets are re-issued (a Corda technique) or settled, and settlement is a necessity for real-life assets. To support this mode of operation and still ensure integrity, Corda requires provenance proofs. With such proofs, Bob and Alice learn not only the details of their DvP transaction but also of all previous transactions that transferred the IOU, revealing all the previous (possibly pseudonymized) owners of the IOU to them.

Daml: defining the need to know

At Digital Asset, we engineered a permissioned ledger that is able to achieve all three stated goals of DLT: integrity, availability, and privacy on a strict need to know basis. A key enabler for reconciling these goals is our contract language, Daml. If you’ve been following this blog series, you may recall that in A new language for a new paradigm: smart contracts, Ben and Edward described that, in Daml, “parties and contracts are native constructs in the language”. Then, Martin and Jost carefully outlined how Daml explicitly models and tracks the flow of authorization between rights, obligations, and delegations of rights in their blog entry, The only valid smart contract is a voluntary one — easier said than done. Finally, Alex and Ratko examined how these Daml properties can be leveraged to provide integrity and availability in the DA platform. To achieve the final part of the DLT trifecta — privacy — the DA Platform takes a data isolation approach, ensuring that participants only receive data they are entitled to see. We next discuss the Daml properties that enable this isolation.

Automated, business-specific need to know

Ben and Edward labeled Daml as “a language of privacy” because “the right of a given party to view the details of a particular contract is a fundamental notion that can be reasoned about directly.” That is, Daml defines the need to know basis automatically, on a per-contract basis. This has profound implications for privacy and enables the DA Platform to deliver superior privacy characteristics without sacrificing integrity.

To explain why, let’s first describe how the need to know basis can be derived automatically. Daml transactions consume and produce contracts; because Daml was written to enable developers to express contracts, parties, rights, obligations, and authorization directly, the contracts in a transaction form a precise, business process-specific definition of who needs to see what data. The designated signatories of contracts and the parties authorizing the use of their rights need to see the changes to the contracts, and all the data required for validating such a change. The Daml system then automatically calculates which additional participants are authorized to see the details of each contract, and the DA Platform distributes the contract data only to authorized participants. Unauthorized participants never receive any details about the contracts, not even in an encrypted form.
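The derivation described above can be sketched in a few lines. This is a simplified model written in Python rather than Daml, and it is not the DA Platform's implementation: the `Contract` class, the `observers` field, and the functions `need_to_know` and `distribute` are assumptions introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    name: str
    signatories: frozenset
    observers: frozenset = frozenset()  # additional entitled parties (assumed)


def need_to_know(contract, actors=frozenset()):
    """Parties entitled to see a change to `contract` made by `actors`."""
    return contract.signatories | contract.observers | frozenset(actors)


def distribute(contract, actors, all_participants):
    """Send contract data only to entitled parties; others get nothing at all."""
    entitled = need_to_know(contract, actors)
    return {p: (contract if p in entitled else None) for p in all_participants}


# Hypothetical cash holding: the bank signs it, and Alice uses her right
# to transfer it.
cash = Contract("cash holding", signatories=frozenset({"Bank"}))
delivered = distribute(cash, {"Alice"}, ["Alice", "Bob", "Bank", "CSD"])

for party, data in delivered.items():
    print(party, "sees" if data is not None else "receives nothing about", cash.name)
```

The point of the sketch is that the recipients are computed from the contract itself rather than listed by hand for each transaction.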

For example, take a regulated market that operates under market-wide rules — what Alex and Ratko identified as Master Service Agreements, or MSAs. These market-wide rules can be encoded in Daml and shared with all market participants, but the actions taken by participants — and their data — are only shared with the relevant stakeholders as determined automatically by the market rules. No other smart contract language provides this capability.

In contrast to the DA Platform, Fabric and Corda rely on general purpose languages in order to take advantage of a large and established developer base. However, such languages provide no built-in notion of the need to know basis. In Fabric, this basis is specified by assigning parties to channels. This assignment is completely detached from the business processes and the contracts that expressed these processes. In Corda, the contract developer is responsible for ensuring that all the parties who need to know are in fact notified. This is an additional burden on the developer, and one consequence is that developer errors can leak data accidentally by disclosing too much information.

Furthermore, the opposite problem, disclosing too little information, matters just as much. For example, the owner of an American call option purchases the right to exercise that option and take delivery of the underlying asset prior to expiration. In such a scenario the seller of the option is not involved in the choice to exercise, and therefore not part of the authorization flow, but must nevertheless be notified that the buyer has exercised their right. Other platforms require the developer to manage these data flows, but because of the information provided by Daml, the DA Platform is able to couple the validity of a transaction to the proof that all stakeholders of this transaction have been properly informed. With the DA Platform, a transaction that fails to correctly notify all affected stakeholders will not be considered valid.
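A minimal sketch of coupling validity to notification follows, using the option example. The function `validate` and the party names are hypothetical, and the real platform's checks are more involved than a set difference.

```python
def validate(stakeholders, notified):
    """Reject the transaction unless every stakeholder has been notified."""
    missing = set(stakeholders) - set(notified)
    if missing:
        raise ValueError(f"stakeholders not notified: {sorted(missing)}")
    return True


# The buyer exercises the option. The seller takes no part in that choice
# but is still a stakeholder of the option contract.
option_stakeholders = {"Buyer", "Seller"}

print(validate(option_stakeholders, notified={"Buyer", "Seller"}))

try:
    validate(option_stakeholders, notified={"Buyer"})
except ValueError as err:
    print("rejected:", err)
```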

Fine-grained privacy

Daml pushes the state of the art in privacy preservation to a whole new level by also being able to determine the need to know at a very fine-grained level. With Daml, transactions can be broken up into sub-transactions, and these sub-transactions are then selectively revealed to participants. Returning to our DvP example, when written in Daml, the DA Platform would reveal the entire transaction only to Alice and Bob. The bank and the CSD would be notified that the cash and shares, respectively, were transferred, and they can verify that the transfers were correct. But they would not learn anything about why the transfers happened. We can visualize this below, where each box shows to whom the part of the transaction is shown:

Contrast this example with Corda, which specifies the need to know only on the level of transactions. In the DvP example with Corda, the bank learns what Alice paid for, and the CSD learns the price that Alice paid. This is a clear violation of Alice’s privacy, and can also be a violation of regulatory requirements in some cases. Corda recognizes the problem of fine-grained privacy and approaches it through transaction tear-offs. However, this approach has two limitations. First, Corda tear-offs can only hide data from so-called Oracles, who do not need to see any input or output data, but not from other stakeholders — as would be required in the example above. Second, this puts the onus on the contract developer to manually define the data hiding of every transaction. We believe this to be error prone, especially for the complex workflows of financial use cases.
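The difference between the two granularities can be sketched with a small transaction tree. This is a toy model of the DvP example, not the DA Platform's or Corda's actual data structures: the `Node` class, the `witnesses` field, and both projection functions are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    description: str
    witnesses: frozenset          # parties entitled to see this node
    children: list = field(default_factory=list)


def flatten(node):
    """Yield a node and all of its sub-transactions, depth first."""
    yield node
    for child in node.children:
        yield from flatten(child)


def project(root, party):
    """Sub-transaction granularity: reveal only the nodes `party` may see."""
    return [n.description for n in flatten(root) if party in n.witnesses]


def project_transaction_level(root, party):
    """Transaction granularity: any involved party sees the whole transaction."""
    nodes = list(flatten(root))
    involved = set().union(*(n.witnesses for n in nodes))
    return [n.description for n in nodes] if party in involved else []


dvp = Node(
    "DvP: swap cash for shares",
    frozenset({"Alice", "Bob"}),
    [
        Node("transfer cash Alice -> Bob", frozenset({"Alice", "Bob", "Bank"})),
        Node("transfer shares Bob -> Alice", frozenset({"Alice", "Bob", "CSD"})),
    ],
)

print(project(dvp, "Bank"))                    # only the cash transfer
print(project_transaction_level(dvp, "Bank"))  # the whole DvP
```

In the first projection the bank learns that cash moved and nothing else. In the second it also learns what Alice bought.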

In conclusion, with Daml we designed a business process modeling language that computes the need to know for all data, down to sub-transaction granularity. It does so in fully automated fashion, and independently of the underlying DLT architecture. The DA Platform was designed to leverage this information, and guarantee strong privacy on a strict need to know basis for its participants.

In these past few entries of the Daml blog series, the role of Daml in supporting the ledger’s ability to maintain availability, integrity, and privacy has been explored. The next entry will conclude this part of the discussion with a focus on Daml’s role in maintaining an immutable, incontrovertible evidentiary trail.

Read Part 7: Smart contract language: the real arbiter of truth?

New to this series about Daml? Click here to read from the beginning!

Join the community and download the Daml SDK.

This story was originally published 11 July 2018 on Medium

About the authors

Ognjen Maric, Ph.D., Formal Methods Engineer, Digital Asset

Ognjen joined Digital Asset’s language engineering team in mid-2017, and has been working on formal modeling of core Daml features and DA platform protocols.

Immediately before joining DA, Ognjen completed his Ph.D. at ETH Zurich, with a thesis on formal verification of fault-tolerant systems. His interests lie in the intersection of formal verification, distributed systems, and security protocols. Prior to his Ph.D., he worked as a freelance developer for several years.

Robin S. Krom, Ph.D., Senior Software Engineer, Digital Asset

Robin is a member of the language engineering team at Digital Asset concerned with developing and evolving the Daml language. He was also part of the team that modeled and then implemented the privacy mechanisms and integrity checks in the DA platform.

Prior to joining Digital Asset, Robin finished his Ph.D. in Mathematics at ETH Zurich, where he explored new ways towards proving the uniqueness of symplectic structures on closed 4-dimensional manifolds in a fixed cohomology class. He is very much interested in pure and applied mathematics, especially in computer science, and loves coding in Haskell.

by Ognjen Maric

July 18, 2018
